Welcome to the Duckietown Developer

Manual

Contents

Basics

Basics - Terminal

Basics - Development

Beginner

Beginner - The DTProject

Beginner - Use ROS

Beginner - Python Library

Beginner - Code Hierarchy

Beginner - Documentation

Intermediate

The DTROS class

Intermediate - Diagnostics

Simulation in Duckietown

Basics - Terminal

Working over the terminal is a skill that every roboticist-to-be needs to acquire. It enables you to work

on remote agents or computers without the need for a graphical user interface (GUI) and lets you work

very efficiently. Once you get the hang of it, you will find out for yourself how it can make your life

easier.

Using a terminal

It makes sense to learn how to use the terminal very well, as it will save you a lot of time along the way.

If you are completely new to working with a terminal, often also called “console” or “command line”, an

official beginners tutorial can be found on the Ubuntu website.

If you are looking for an extensive list of commands that can be used from the terminal, this is the place

to look at.

Using the Duckietown Shell

The Duckietown Shell, or dts for short, is a pure Python, easily distributable (few dependencies) utility

for Duckietown.

What you will need Laptop setup

Duckietown account

What you will get Know how to use a terminal

The idea is that most of the functionalities are implemented as Docker containers, and dts provides a

nice interface for that, so that users should not type a very long docker run command line. These

functionalities range from calibrating your Duckiebot and running demos to building this

Duckumentation and submitting agents to open challenges and monitoring your evaluations and

leaderboard positioning.

If you followed all the steps in the laptop setup, you already installed dts. If not, now is the time to go

back and do it.

Basics - Development

This section of the book will introduce the basics of software development in Duckietown. The

arguments presented in this section are very general and should be clear to any developer (not just in

Duckietown).

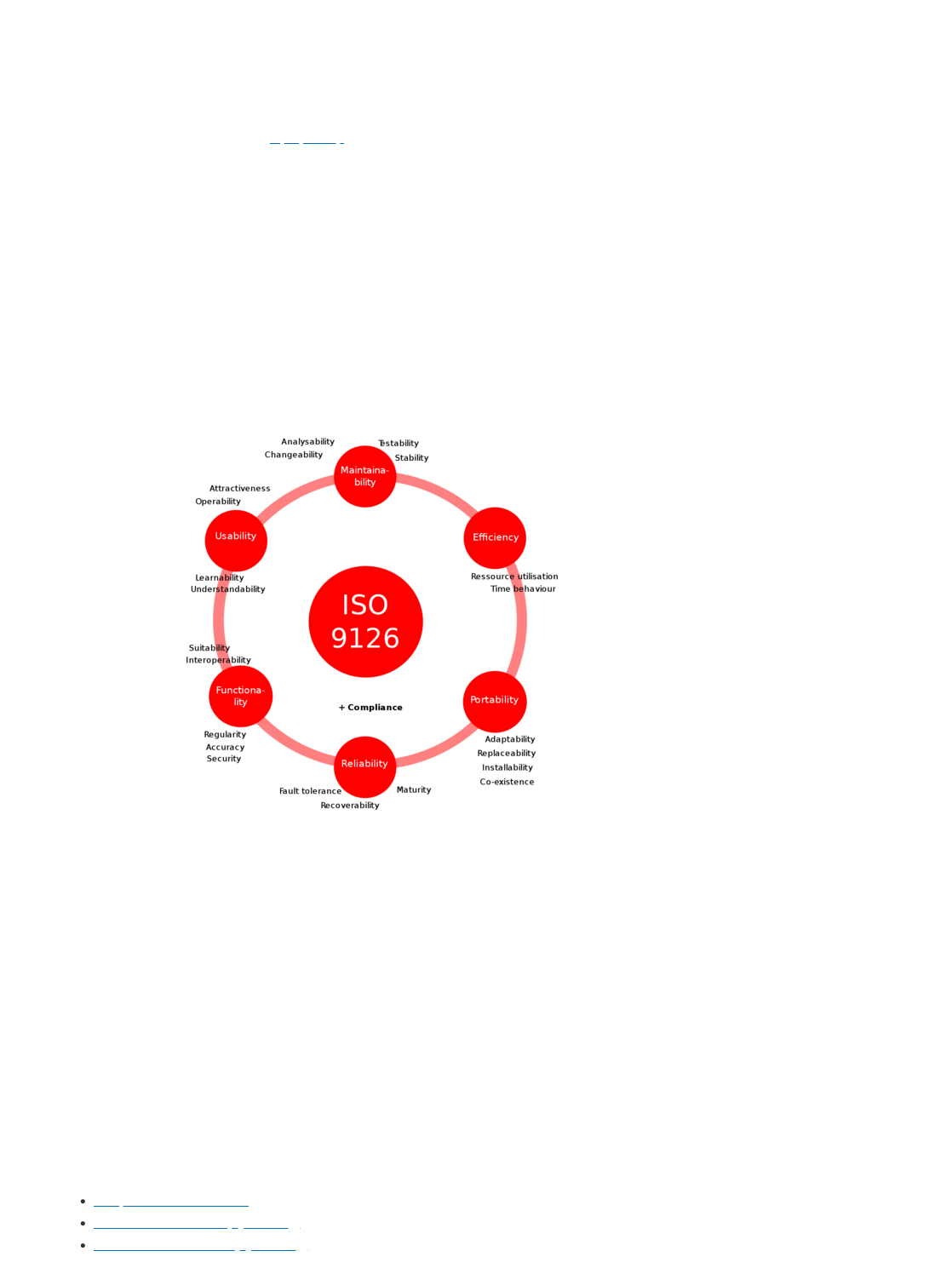

ISO/IEC 9126

ISO/IEC 9126 is a international standard for product quality in Software Engineering. It was officially

replaced by the new ISO/IEC 25010 in 2011 that introduces a few minor changes.

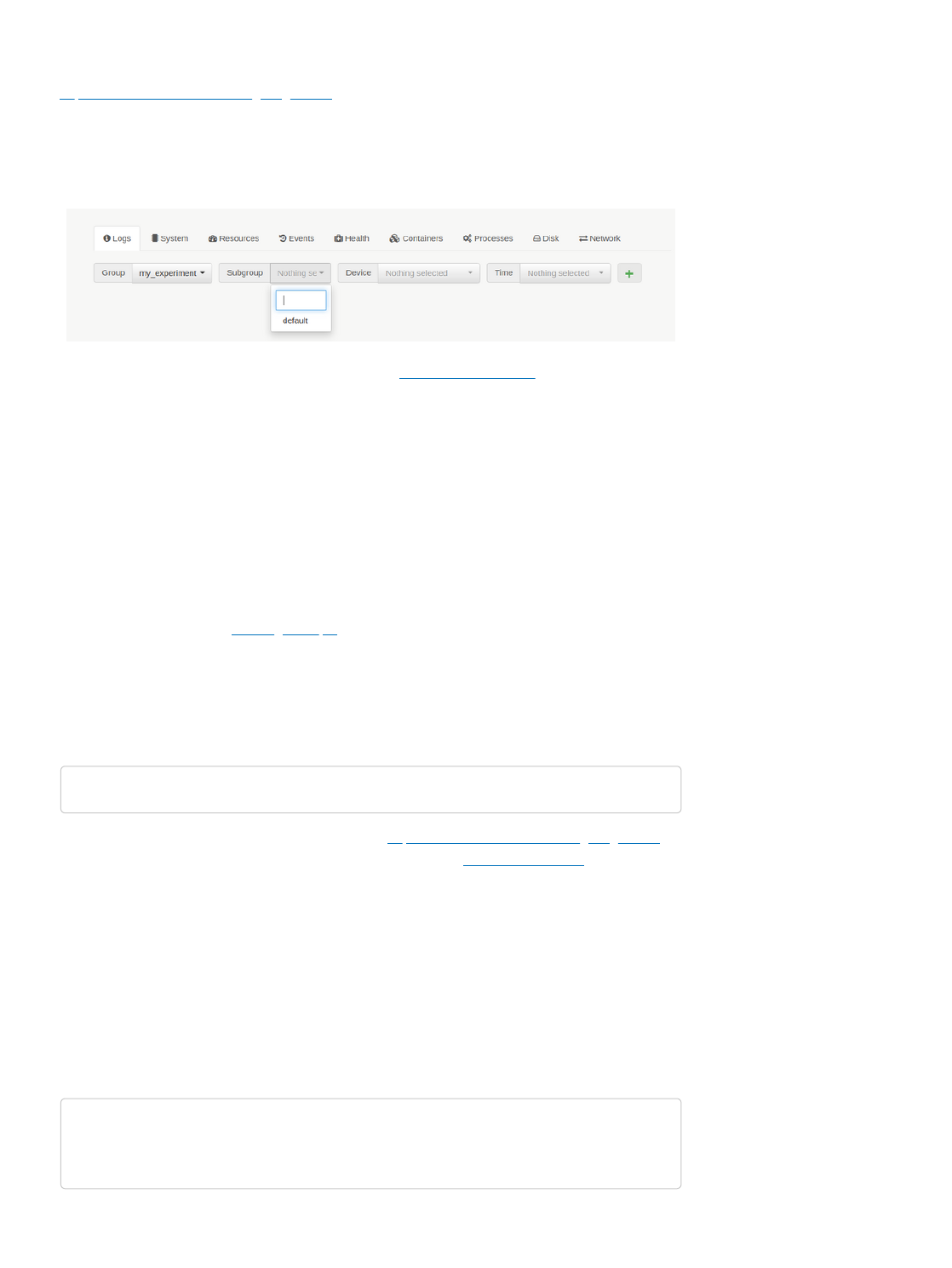

Fig. 1 ISO/IEC 9126 Standard (source: Wikipedia)

Software development, as any other activities carried out by human beings is subject to human biases.

The ISO/IEC 9126 standard’s objective is that of aknowledging the most common biases and

addressing them by definining clear guidelines about what properties a good software product should

have.

In this section, we are not going to dive into this standard, but we strongly believe that such standard

(and its successor ISO/IEC 25010) should be the best friend of any developer.

Throughout this book, we will mention some of these qualities as we motivate some of the decisions

made while creating the Duckietown Development Workflow.

Hands on

We suggest the reader to get familiar with such standard by using these resources:

Wikipedia - ISO/IEC 9126

Official ISO/IEC 9126 (by ISO.org)

Official ISO/IEC 25010 (by ISO.org)

Ask the community

If you have any questions about good practices in software development, join the Duckietown Slack.

Linux

This section of the book will introduce Linux distributions and specifically the Ubuntu distribution. We

will provide guides for installing Ubuntu in dual-boot mode or inside a virtual machine.

Linux

Linux is a group of free and open-source software operating systems built around the Linux kernel first

released in 1991. Typically, Linux is packaged in a form known as a Linux distribution such as Fedora

or Ubuntu.

Ubuntu is the Linux distribution officially supported by the Duckietown community.

Ubuntu

As of this writing, the most recent version of Ubuntu is 22.04 LTS (Long Term Service) which will be

supported until April 2032.

Installation

It is highly recommended to install Ubuntu directly on your laptop or as a dual boot operating system

alongside your existing OS. However we also provide some guidance on installing Ubuntu within a

Virtual Environment on your laptop.

Dual Boot

First you need to download a .iso image file which contains the version of Ubuntu you want.

Here is 22.04 LTS make sure to download the desktop image.

Next, you need a free USB drive with at least 2GB of space. The drive will be completely written

over.

You need some software to write the .iso to the USB. If on Windows you can use Rufus

Create the bootable USB drive, disconnect the USB then reconnect to your computer.

Restart your computer

If your computer simply boots into the existing operating system you need to change the

boot order in your BIOS.

Restart your computer again and press the button during startup which lets you into the

BIOS. It may say on your computer what this button is but you may need to Google

depending on your laptop model. For example Lenovo might be F1 or F2.

Look for an option to change boot order and put priority on your USB drive.

Your computer should now boot into Ubuntu installation and you can follow the instructions for

dual boot.

Virtual Machine

First you need to download a .iso image file which contains the version of Ubuntu you want. Here

is 22.04 LTS make sure to download the desktop image.

Download your desired Virtual Machine platform (popular choices are Virtual Box and VMWare).

Note: Using a Virtual Machine might require some particular settings for you networking settings. The

virtual machine should appear as a device on your local network. For example, in VirtualBox, you need

to set up a Bridged Network. This might differ in other hypervisors.

Terminal

Some pointers:

Open a terminal with Ctrl + Alt + T

/ is the top level root directoy which contains your

~ refers to your home folder located in /home/[username]

Hands on

We suggest that you install a Linux distribution on your computer and get familiar with it before

proceeding to the next sections.

Ask the community

If you have any questions about good practices in installing Ubuntu on your computer or other

questions about Ubuntu, join and ask on the Duckietown Slack!

Git

Every time there is a large project, with many contributors and the need for code versioning and history,

developers rely on VCS (Version Control Systems) tools. Duckietown uses Git as VCS and GitHub.com

as a service provider for it. The Duckietown organization page on GitHub is github.com/duckietown.

Monolithicity VS Modularity

Whether a software project should be monolithic or modular is one of the most debated decisions that

a group of developers faces at the beginning of a software project. Books have been written about it.

Duckietown started as a monolithic project, and some of us still remember the infamous Software

repository, and only later transitioned to a full modular approach.

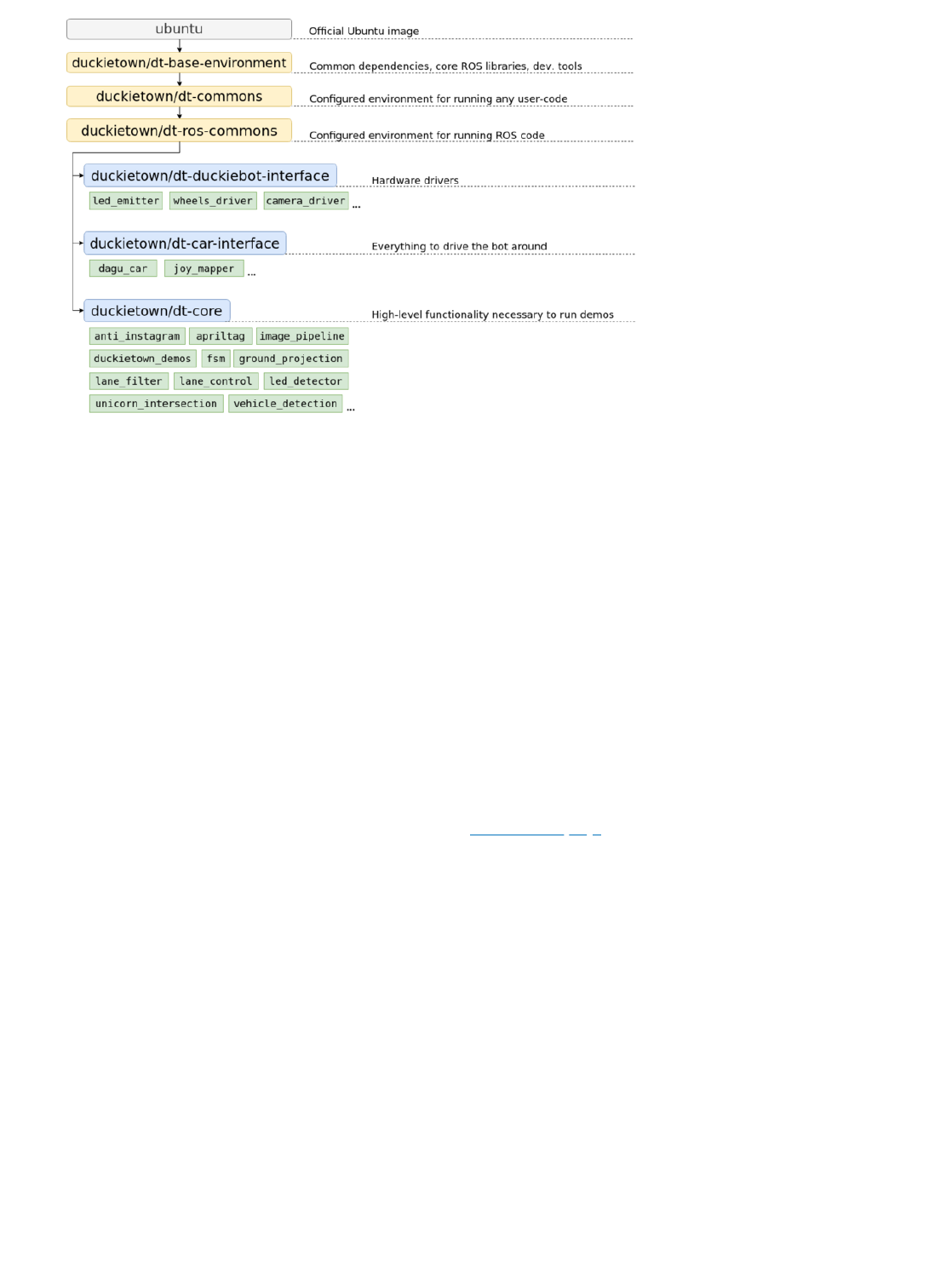

There are two levels of modularity in Duckietown. We distinguish between Modules and Nodes.

Modules form our first and highest level of modularity, with each module being a collection of nodes.

Nodes constitute the smallest software entities, and each node is usually responsible for a very specific

task. Nodes are not allowed to exist outside modules. We will revisit these concepts later in the book,

but in a nutshell, modules represent high level concepts, like autonomous driving capability for a

vehicle, while nodes within a module tackle more granular tasks, like traffic signs detection.

In Duckietown, code is separated so that each module has its own repository. All official repositories

are hosted under the same GitHub organization github.com/duckietown. Be brave, (as of April 2020)

we have more than 220 repositories there. You can also have your own modules hosted as repositories

on your own GitHub account.

Git

This section goes through the most common operations you can perform on a git project and a git

project hosted on GitHub.com.

Terminology

A non-exhaustive list of terms commonly used in git follow.

Repository

A repo (short for repository), or git project, encompasses the entire collection of files and folders

associated with a project, along with each file’s revision history.

Branch

Branches constitute threads of changes within a repository. Though we call them branches, do not try

to force an analogy with tree branches, they are similar in spirit but quite different in how they work.

A repository is not allowed to exist without a branch, and every operation inside a repository only

makes sense in the context of a branch (the active branch). Inside a repository, you can have as many

branches as you want, but you always work on one branch at a time. Every git project has at least one

main branch, usually called the master branch.

Use the command git branch to see the list of branches present in your repository and which branch

you are currently working on.

Though, branches are used in different scenarios, they simply allow groups of developpers to work on

their own task without having their work affect or be affected by other groups’ work. For example, after

a project is released with version 1.0.0, one team is tasked to develop a new feature for the version

1.1.0 milestone while another team is asked to fix a bug that a user reported and whose patch will be

released in the version 1.0.1.

Branch operations are performed through the command git branch.

Commit

A commit is an atomic change in a repository. A commit is a set of changes to one or more files within

your repository. Each commit is uniquely identified within a repository by the hash (SHA-1) of the

changes it contains (“plus” a header).

When you create/delete/edit one or more files in a git repository and you are confident enough about

those changes, you can commit them using the command git commit.

Note: A commit is not a snapshot (or a copy) of the entire repository at a given point in time. Each

commit contains only the incremental difference from the previous commit, called delta in git.

A chain of commits in which all the ancestors are included makes a branch. Since every commit is

linked to its parent, a branch is simply a pointer to a commit (the full chain of commits can always be

reconstructed from the commit). In other words, you can think of branches as human friendly labels for

commits. Every time you create a new commit, the pointer of the current branch advances to the newly

created commit.

Tag

A tag is a human friendly name for a commit but unlike branches, tags are read-only. Once created,

they cannot be modified to point to a different commit.

Tags are commonly used for labeling commits that constitute milestones in the project development

timeline, for example a release.

Fork

A fork is basically a copy of someone else’s repository. Usually, you cannot create branches or change

code in other people’s repositories, that’s why you create your own copy of it. This is called forking.

Remote

A git remote is a copy of your repository hosted by a git service provider, e.g. GitHub. Remotes allow

you to share your commits and branches so that other developers can fetch them. Technically

speaking, remotes are exactly the same as local repositories, but unlike your local repository, they are

reachable over the internet.

You can use the commands git fetch and git push to bring your local copy of the repository in sync

with a remote, by downloading commits or uploading new commits respectively.

Merging branches

Merging is the dual operation of creating a new branch. Imaigne you have branched out a new branch

(e.g. new-feature) from the some branch (e.g. master), made some improvements and tested them out.

Now you want to incorporate these changes in the master branch which hosts your main code. The

merge operation does exactly that. It takes the changes done in new-feature and applies them to

master.

Often git will manage to apply these changes by itself. However, sometimes if both new-feature and

master changed the same part of the code, git cannot determine by itself which of the two changes

should be kept. Such a case is called merge conflict and you will have to manually select what should

be kept after the merge.

Pull Requests

If you are working on a secondary branch or if you forked a repository and want to submit your

changes for integration into the mainstream branch or repository, you can open a so-called Pull

Request (in short PR).

A pull request can be seen as a three-step merge operation between two branches where the changes

are first proposed, then discussed and adapted (if requested), and finally merged.

Common operations

Fork a repository on GitHub

To fork (creating a copy of a repository, that does not belong to you), you simply have to go to the

repository’s webpage and click fork on the upper right corner.

Clone a repository

Cloning a repository is the act of creating a local copy of a remote repository. A repo is cloned only at

the very beginning, when you still don’t have a local copy of it.

To clone a repository, either copy the HTTPS or SSH link given on the repository’s webpage. Use the

following command to create a local copy of the remote git repository identified by the given URL.

This will create a directory in the current working path with the same name of the repository and the

entire history of commits will be downloaded onto your computer.

Create a new branch

The command for creating a new branch is a little bit counter-intuitive, but you will get use to it. Use the

following command to create a new branch:

This creates a new branch pointing at the same commit your currently active branch is pointing at. In

other words, you will end up with two branches pointing at the same commit. Note that after you issue

this command, the newly created branch becomes your active branch.

Working tree

In git, we use the term working tree to indicate all the changes that are not committed yet. You can

think of it as your workspace. When you create a new commit, the hash for the current working tree is

computed and assigned to the new commit together with the changes since the last commit. The

working tree clears as you commit changes.

Remark: You cannot create commits from a clean working tree.

Use the command git status to inspect the status of your working tree.

Create a new commit

Unlike many git operations, a commit is not created by a single git command. There are two steps to

follow. First, we mark all the changes that we want to be part of our new commit, second, we create the

commit. From your working tree, mark changes to include in the new commit using the command:

The command git status will always show you which changes are marked to be used for a new

commit and which changes are not. Use the command

to create a new commit. Replace [COMMIT-MESSAGE] with your notes about what changes this commit

includes.

Note: Do not underestimate the value of good commit messages, the moment you will go back to your

history of commits looking for a change of interest, having good commit messages will be a game

changer.

git clone [REPOSITORY-URL]

git checkout -b [NEW-BRANCH-NAME]

git add [FILE]

git commit -m "[COMMIT-MESSAGE]"

Push changes

Use the following command to push your local changes to the remote repository so that the two

repositories can get in sync.

Fetch changes

If you suspect that new changes might be available on the remote repository, you can use the

command

to download the new commits available on the remote (if any). These new changes will be appended to

the branch called origin/[BRANCH-NAME] in your local repository. If you want to apply them to your

current branch, use the command

Use the command git pull origin/[BRANCH-NAME] to perform fetch and then merge.

Delete branches

Unlike the vast majority of git commands, git delete does not work on the current branch. You can

delete other branches by running the command

If you want to delete your current branch, you will need to checkout another branch first. This prevents

ending up with a repository with no branches.

To propagate the deletion of a branch to the remote repository, run the command:

Open a GitHub Issue

If you are experiencing issues with any code or content of a repository (such as this operating manual

you are reading right now), you can submit issues. For doing so go to the dashboard of the

corresponding repository and press the Issues tab where you can open a new request.

For example you encounter a bug or a mistake in this operating manual, please visit this repository’s

Issues page to report an issue.

GitHub Issues are a crucial part of the life cycle of a software product, as they constitute a feedback

loop that goes directly from the end-user to the product developers. You don’t have to be a developer

or an expert in software engineering to open an Issue.

Hands on

Git

It is strongly suggested to all git beginners to follow the awesome tutorial Learn Git Branching.

Further reading material can be found at the following links:

Git Handbook

Basic Branching and Merging

GitHub

You can gain access to GitHub by creating an account on github.com (if you don’t have one already).

git push origin [BRANCH-NAME]

git fetch origin [BRANCH-NAME]

git merge origin/[BRANCH-NAME]

git branch -d [BRANCH-NAME]

git push origin --delete [BRANCH-NAME]

A short GitHub tutorial is available at this link.

It is higly suggested that you setup an SSH key for secure passwordless access to GitHub by following

these steps:

1. Generate a new SSH key

2. Add SSH key to your GitHub account.

Ask the community

If you have any questions about how to use of Git in Duckietown, join and ask on the Duckietown

Slack!

Docker

This section will introduce Docker and the features of Docker that the Duckietown community employs.

For a more general introduction to Docker, we suggest reading the official Docker overview page.

What is Docker?

Docker is used to perform operating-system-level virtualization, something often referred to as

“containerization”. While Docker is not the only software that does this, it is by far the most popular

one.

Containerization refers to an operating system paradigm in which the kernel allows the existence of

multiple isolated user space instances called containers. These containers may look like real

computers from the point of view of programs running in them.

A computer program running on an ordinary operating system can see all resources available to the

system, e.g. network devices, CPU, RAM; However, programs running inside of a container can only

see the container’s resources. Resources assigned to the container become thus available to all

processes that live inside that container.

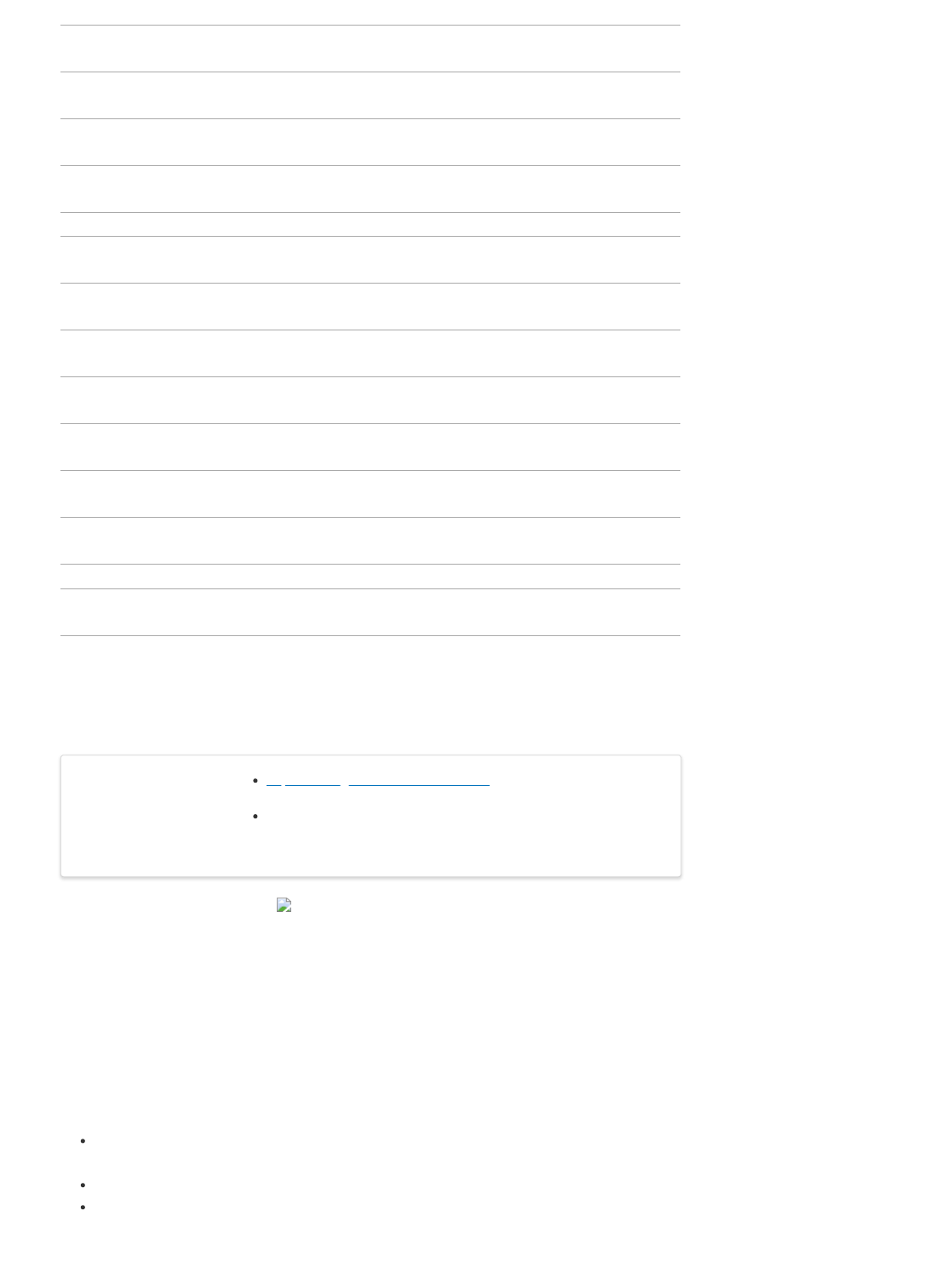

Containers VS. Virtual Machine

Containers are often compared to virtual machines (VMs). The main difference is that VMs require a

host operating system (OS) with a hypervisor and a number of guest OSs, each with their own libraries

and application code. This can result in a significant overhead.

Imagine running a simple Ubuntu server in a VM on Ubuntu: you will have most of the kernel libraries

and binaries twice and a lot of the processes will be duplicated on the host and on the guest OS.

Containerization, on the other hand, leverages the existing kernel and OS and adds only the additional

binaries, libraries and code necessary to run a given application. See the illustration bellow.

Fig. 2 Differences between Virtual Machines and Containers (source: Weaveworks)

Because containers don’t need a separate OS to run they are much more lightweight than VMs. This

makes them perfect to use in cases where one needs to deploy a lot of independent services on the

same hardware or to deploy on not-especially powerful platforms, such as Raspberry Pi - the platform

the Duckietown community uses.

Containers allow for reuse of resources and code, but are also very easy to work with in the context of

version control. If one uses a VM, they would need to get into the VM and update all the code they are

using there. With a Docker container, the same process is as easy as pulling the container image

again.

How does Docker work?

You can think that Docker containers are build from Docker images which in turn are build up of Docker

layers. So what are these?

Docker images are build-time artifacts while Docker containers are run-time constructs. That means

that a Docker image is static, like a .zip or .iso file. A container is like a running VM instance: it starts

from a static image but as you use it, files and configurations might change.

Docker images are build up from layers. The initial layer is the base layer, typically an official stripped-

down version of an OS. For example, a lot of the Docker images we run in Duckietown have

ubuntu:18.04 as a base.

Each layer on top of the base layer constitutes a change to the layers below. The Docker internal

mechanisms translate this sequence of changes to a file system that the container can then use. If one

makes a small change to a file, then typically only a single layer will be changed and when Docker

attempts to pull the new version, it will need to download and store only the changed layer, saving

space, time and bandwidth.

In the Docker world, images get organized by their repository name, image name and tags. As with Git

and GitHub, Docker images are stored in image registers. The most popular Docker register is called

DockerHub and it is what we use in Duckietown.

An image stored on DockerHub has a name of the form:

The parts repository and tag are optional and they default to library (indicating Docker official

images) and latest (special tag always pointing to the latest version of an image). For example, the

Duckietown Docker image

has the repository name duckietown, the image name dt-core, and the tag ente-arm64v8, which

carries both the name of the Duckietown software distribution that the image contains, i.e., ente, and

the CPU architecture that this image is targeting, i.e., arm64v8. We will talk about different CPU

architectures and why they need to be part of the Docker image tag in the section .

All Duckietown-related images are in the duckietown repository. Though images can be very different

from each other and for various applications.

Different CPU architectures

Since Docker images contain binaries, they are not portable across different CPU architectures. In

particular, binaries are executable files that are compiled to the level of CPU instructions. Different CPU

architectures present different instructions sets.

Many modern computers use the amd64 architecture, used by almost all modern Intel and AMD

processors. This means that it is very likely that you can find a Docker image online and run it on your

computer without having to worry about CPU architectures.

In Duckietown, we use low-end computers like the Raspberry Pi (officially used on any Duckietown

device) and Nvidia Jetson. These low-cost computers employ Arm processors that are based on the

arm32v7 instructions set.

[repository/]image[:tag]

duckietown/dt-core:ente-arm64v8

Note: Full disclosure, while all devices officially supported in Duckietown are based on 64-bit capable

Arm processors, thus using the arm64v8 instructions set, the Raspbian OS only supports 32-bit, which

is the reason why we use arm32v7 images.

Working with images

If you want to get a new image from a Docker registry (e.g. DockerHub), you have to pull it. For

example, you can get an Ubuntu image by running the command:

According to , this will pull the image with full name library/ubuntu:latest which, as of May 2020,

corresponds to Ubuntu 20.04.

You will now be able to see the new image pulled by running:

If you don’t need it anymore or you are running out of storage space, you can remove an image with,

You can also remove images by their IMAGE ID as printed by the list command above. A shortcut for

docker image rm is docker rmi.

Sometimes you might have a lot of images you are not using anymore. You can easily remove them all

with:

This will remove all images that are not supporting any container. In fact, you cannot remove images

that are being used by one or more containers. To do so, you will have to remove those containers first.

If you want to look into the heart and soul of your images, you can use the commands docker image

history and docker image inspect to get a detailed view.

Working with containers

Containers are the run-time equivalent of images. When you want to start a container, Docker picks up

the image you specify, creates a file system from its layers, attaches all devices and directories you

want, “boots” it up, sets up the environment up and starts a pre-determined process in this container.

All that magic happens with you running a single command: docker run. You don’t even need to have

pulled the image beforehand, if Docker can’t find it locally, it will look for it on DockerHub.

Here’s a simple example:

This will take the ubuntu image with latest tag and will start a container from it.

The above won’t do much. In fact, the container will immediately exit as it has nothing to execute.

When the main process of a container exits, the container exits as well. By default the ubuntu image

runs bash and as you don’t pass any commands to it, it exits immediately. This is no fun, though.

Let’s try to keep this container alive for some time by using the -it flags. This tells Docker to create an

interactive session.

Now you should see something like:

docker pull ubuntu

docker image list

docker image rm ubuntu

docker image prune

docker run ubuntu

docker run -it ubuntu

Keep in mind that the part after @ will be different — that is your container ID.

In this manual, we will use the following icon to show that the command should be run in the container:

You are now in your new ubuntu container! Try to play around, you can try to use some basic bash

commands like ls, cd, cat to make sure that you are not in your host machine.

You can check which containers you are running using the docker ps command — analogous to the

ps command. Open a new terminal window (don’t close the other one yet) and type:

An alternative (more explicit) syntax is

These commands list all running containers.

Now you can go back to your ubuntu container and type exit. This will bring you back to you host shell

and will stop the container. If you again run the docker ps command you will see nothing running. So

does this mean that this container and all changes you might have made in it are gone? Not at all,

docker ps and docker container list only list the currently running containers.

You can see all containers, including the stopped ones with:

Here -a stands for all. You will see you have two ubuntu containers here. There are two containers

because every time you use docker run, a new container is created. Note that their names seem

strangely random. We could have added custom, more descriptive names—more on this later.

We don’t really need these containers, so let’s get rid of them:

You need to put your container names after rm. Using the containr IDs instead is also possible. Note

that if the container you are trying to remove is still running you will be asked to stop it first.

You might need to do some other operations with containers. For example, sometimes you want to

start or stop an existing container. You can simply do that with:

Imagine you are running a container in the background. The main process is running but you have no

shell attached. How can you interact with the container? You can open a terminal in the container with:

Running images

Often we will ask you to run containers with more sophisticated options than what we saw before. Look

at the following example: (don’t try to run this, it will not do much).

root@73335ebd3355:/#

command to be run inside the container

docker ps

docker container list

docker container list -a

docker container rm ![container name 1] ![container name 2]

docker container start ![container name]

docker container stop ![container name]

docker container restart ![container name]

docker attach ![container name]

docker -H hostname.local run -dit --privileged --name joystick --network=host -v

/data:/data duckietown/rpi-duckiebot-joystick-demo:master18

shows a summary of the options we use most often in Duckietown. Below, we give some examples

Table 1 docker run Options

Short

command

Full command Explanation

-i --interactive

Keep STDIN open even if not attached, typically used together with

-t.

-t --tty

Allocate a pseudo-TTY, gives you terminal access to the container,

typically used together with -i.

-d --detach Run container in background and print container ID.

--name

Sets a name for the container. If you don’t specify one, a random

name will be generated.

-v --volume

Bind mount a volume, exposes a folder on your host as a folder in

your container. Be very careful when using this.

-p --publish

Publish a container’s port(s) to the host, necessary when you need

a port to communicate with a program in your container.

-d --device

Similar to -v but for devices. This grants the container access to a

device you specify. Be very careful when using this.

--privileged

Give extended privileges to this container. That includes access to

all devices. Be extremely careful when using this.

--rm Automatically remove the container when it exits.

-H --hostname

Specifies remote host name, for example when you want to execute

the command on your Duckiebot, not on your computer.

--help Prints information about these and other options.

Examples

Set the container name to joystick:

Mount the host’s path /home/myuser/data to /data inside the container:

Publish port 8080 in the container as 8082 on the host:

Allow the container to use the device /dev/mmcblk0:

Run a container on the Duckiebot:

Other useful commands

Pruning images

Sometimes your docker system will be clogged with images, containers, networks, etc. You can use

docker system prune to clean it up.

Keep in mind that this command will delete all containers that are not currently running and all images

not used by running containers. So be extremely careful when using it.

--name joystick

-v /home/myuser/data:/data

-p 8082:8080

-d /dev/mmcblk0

-H duckiebot.local

docker system prune

Portainer

Often, for simple operations and basic commands, one can use Portainer.

Portainer is itself a Docker container that allows you to control the Docker daemon through your web

browser. You can install it by running:

Note that Portainer comes pre-installed on your Duckiebot, so you don’t need to run the above

command to access the images and containers on your robot. You still might want to set it up for your

computer.

Hands on

Before you can do any software development in Duckietown, you need to get comfortable with Docker

and its tools.

Complete the following steps before proceeding to the next section:

1. Install Docker

2. Orientation and Setup

3. Build and run your image

4. Share images on Docker Hub

If you still feel like there is something that you are missing about Docker, you might want to spend

some time going through this guide as well.

Ask the community

If you need help with Docker basics or the use of Docker in Duckietown, join the Slack channel #help-

docker.

Duckietown Shell

This section of the book will introduce the Duckietown Shell (dts in short) and the reason behind its

creation. In this book, we will use dts commands quite often, make sure you don’t miss this section.

Brief History

The Duckietown Shell is indeed a shell. It was created in July 2018 to help Duckietown users launch

Duckietown demos on a Duckiebot. It became clear pretty soon that having a dedicated shell for

Duckietown was a game changer for the whole community. In fact, since the very beginning, the shell

had a built-in system for auto-update, which allowed developers to develop new commands or improve

old ones and deploy the changes in no time.

Duckietown has a history of using Makefiles as a way to simplify complex and operations involving

many (usually very long) bash commands. Other developers, instead, preferred bash scripts over

Makefiles. And finally, our CI system (based on Jenkins), used Jenkinsfiles to define automated jobs.

The Duckietown Shell came to the rescue and unified everything, while Makefiles, bash scripts and

Jenkinsfiles slowly started disappearing from our repositories. Today, Docker images to run on

Duckiebots, Python libraries published on PyPi and even the book you are reading right now are built

through dts.

Get Started

The Duckietown Shell is released as a Python3 package through the PyPi package store. You can

install the Duckietown Shell on your computer by running,

docker volume create portainer_data

docker run -d -p 9000:9000 --name portainer --restart always -v

/var/run/docker.sock:/var/run/docker.sock -v portainer_data:/data

portainer/portainer

pip3 install duckietown-shell

This will install the dts command. The Duckietown Shell is distribution independent, so the first time

you launch it you have to specify the distribution of Duckietown software you are working on by

following the setup prompts.

Use the command,

to list all the commands available to the chosen distribution.

You don’t really need to run the shell before you can type in your command, for example, you can

achieve the same result as above by running,

The nice thing about opening the shell before typing your command is that then you can use

the Tab key to auto-complete.

Installable commands

Some commands come not pre-installed. These are usually commands that are either very specific to

an application, thus not useful to the majority of Duckietown users, or commands that can only be used

during a short time window, like commands that let you participate to competitions periodically

organized at international AI and Robotics conferences, e.g. AIDO.

Hands on

Install the Duckietown Shell as instructed in . Make sure everything works as expected by running the

command dts update successfully.

Ask the community

If you have any questions about the Duckietown Shell, join and ask on the Duckietown Slack!

Beginner - The DTProject

Table of contents

New project

(Cmd) commands

dts commands

Note

What you will need Working environment setup

Accounts setup

Basic knowledge of Python

What you will get Learn how to use DTProjects, the most important building block

in Duckietown

What you will need Working environment setup

Accounts setup

Basic knowledge of Python

What you will get Learn how to use DTProjects, the most important building block

in Duckietown

Learn how to create a new DTProject from a template

Duckietown-compliant Docker images are built out of Duckietown Projects, in short DTProjects. A

boilerplate for the simplest DTProject is provided by the duckietown/template-basic repository.

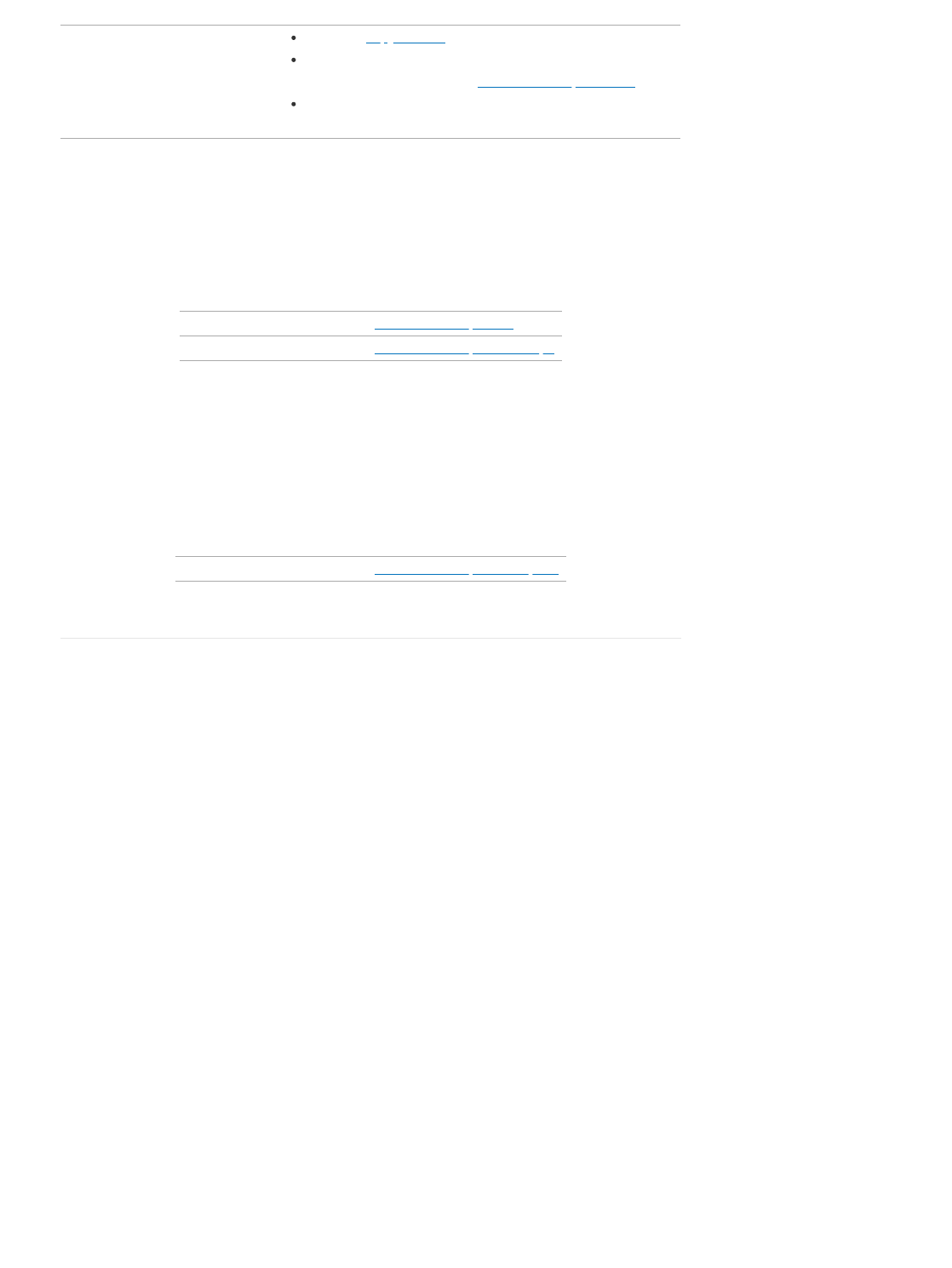

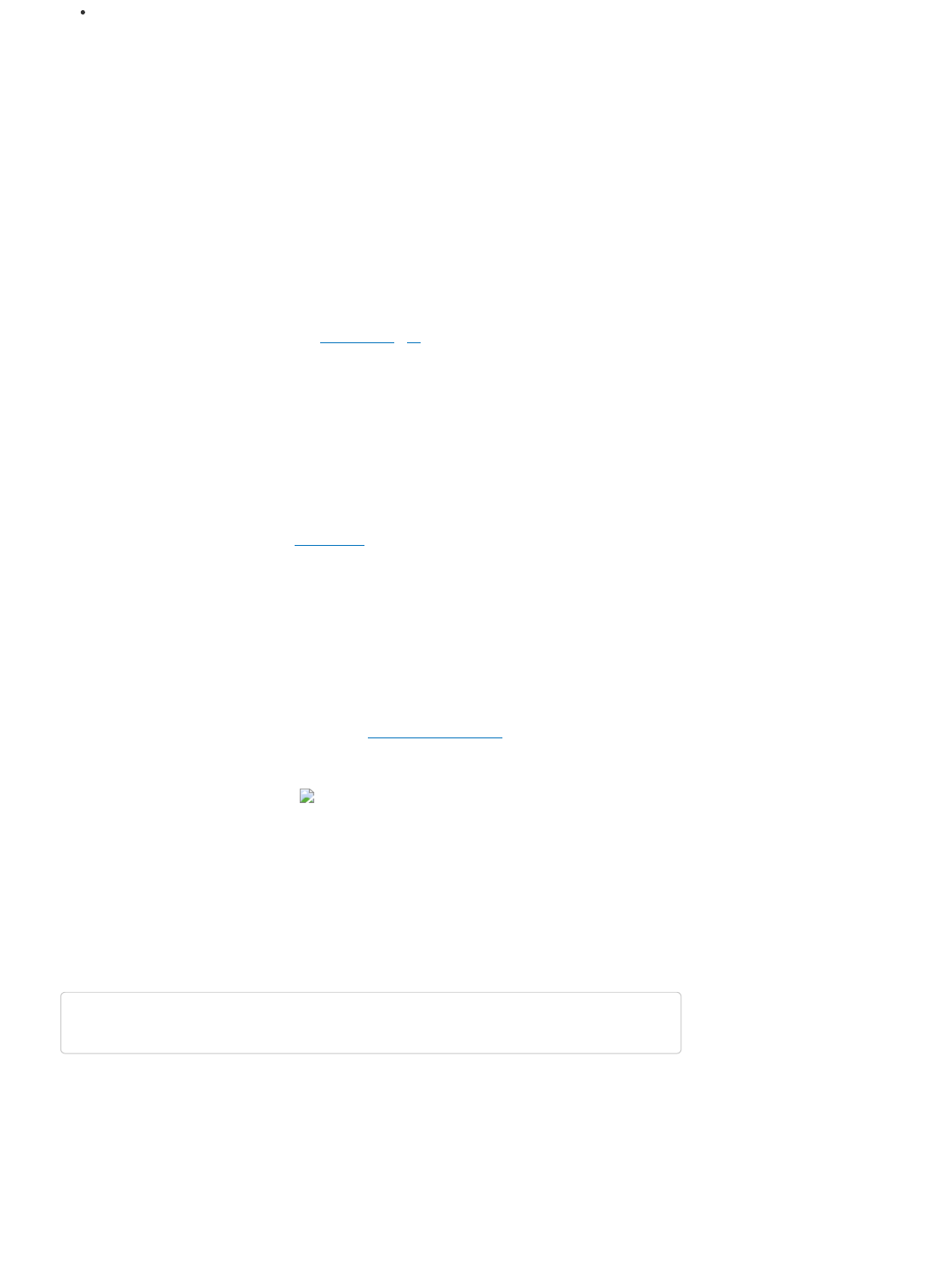

Create project from a template

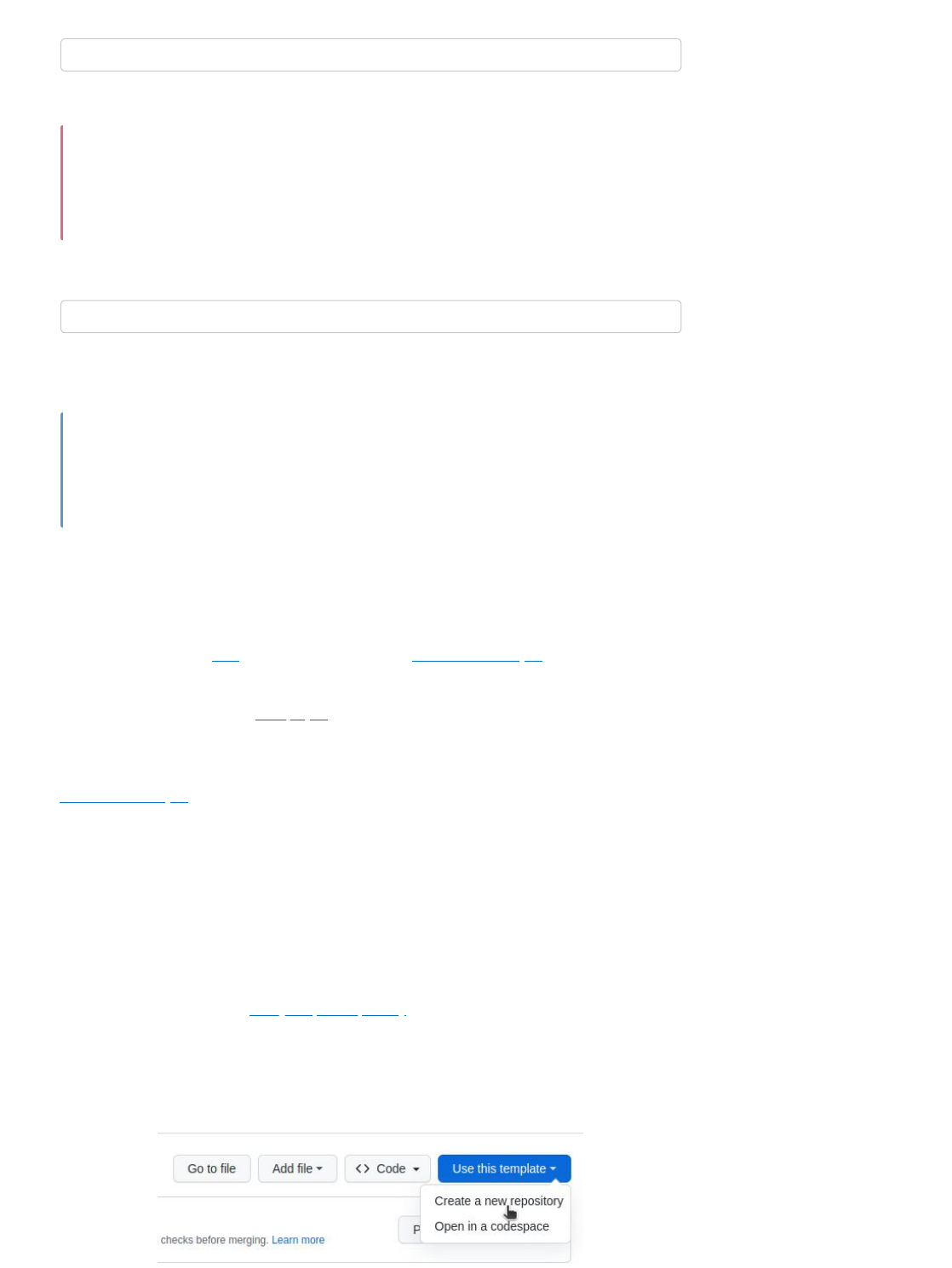

Visit the template repository page duckietown/template-basic. Click on the button that reads “Use this

template” and then choose “Create a new repository” from the dropdown menu.

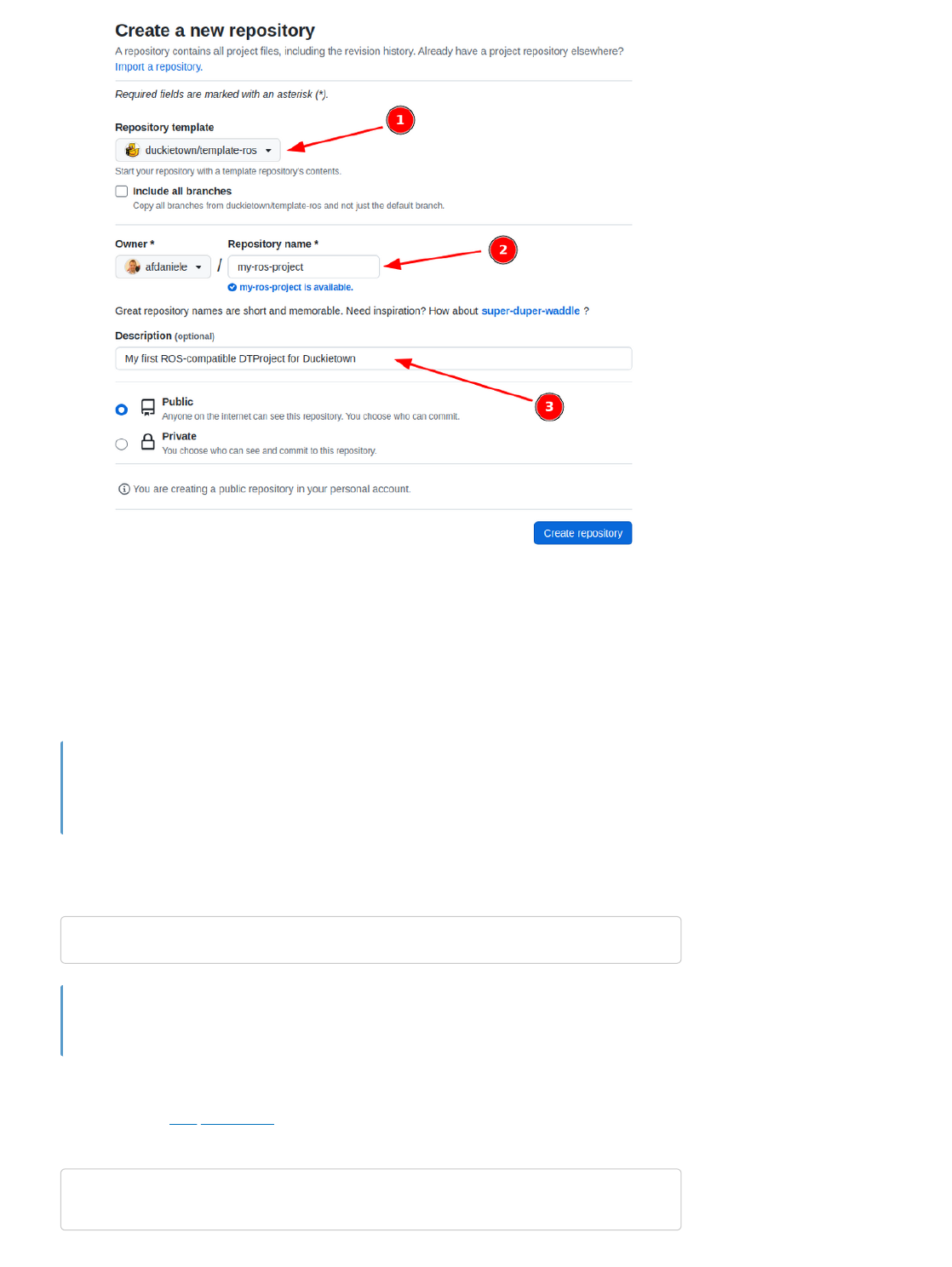

Fig. 3 Use template repository on GitHub.

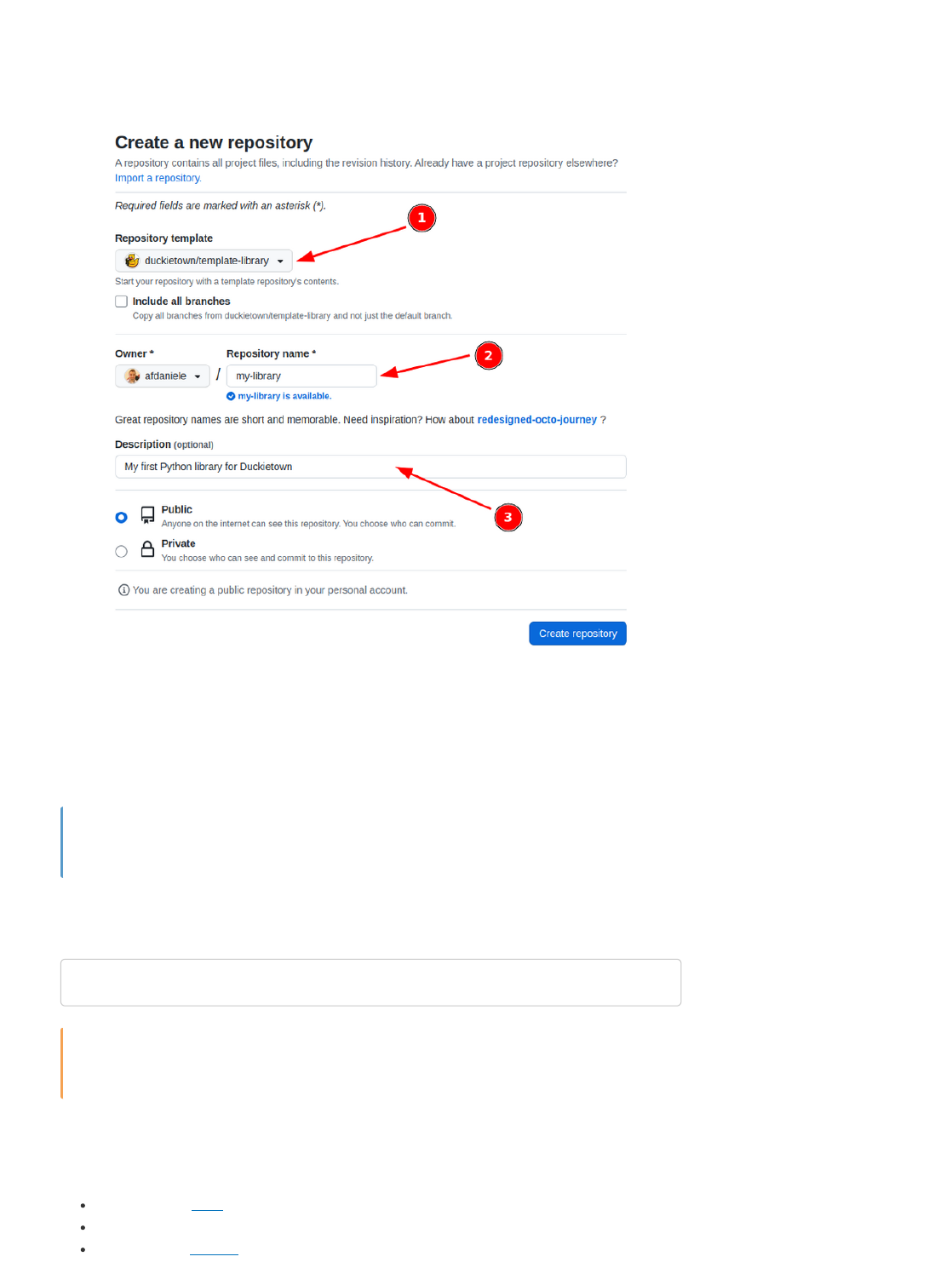

This will take you to a page that looks like the following:

Fig. 4 Creating a repository from template.

Pick a name for your repository (say my-project) and press the button Create repository from

template. Note, you can replace my-project with the name of the repository that you prefer, if you do

change it, make sure you use the right name in the instructions going forward.

This will create a new repository and copy everything from the repository template-basic to your new

repository. You can now open a terminal and clone your newly created repository.

git clone https://github.com/YOUR_NAME/my-project

cd my-project

Replace YOUR_NAME in the link above with your GitHub username.

Edit placeholders

The repository contains already everything you need to create a Duckietown-compliant Docker image

for your program. Before doing anything else, we need to head over to the Dockerfile and edit the

following lines using a text editor:

Replace the placeholders strings with, respectively,

the name of the repository (i.e., my-project);

a brief description of the functionalities of the module

your name and email address to claim the role of maintainer;

For example,

Save the changes. We can now build the image, even though there is not going to be much going on

inside it until we place our code in it.

Build the project

Now, in a terminal, move to the directory created by the git clone instruction above and run the

following command (beware that it might take some time):

The flag -f (short for --force) is needed in order to allow dts to build a module out of a non-clean

repository. A repository is not clean when there are changes that are not committed (and in fact our

changes to Dockerfile are not). This check is in place to prevent developers from forgetting to push

local changes. If the build is successful, you will see something like the following:

Note

ARG REPO_NAME="<REPO_NAME_HERE>"

ARG DESCRIPTION="<DESCRIPTION_HERE>"

ARG MAINTAINER="<YOUR_FULL_NAME> (<YOUR_EMAIL_ADDRESS>)"

ARG REPO_NAME="my-project"

ARG DESCRIPTION="My first Duckietown project"

ARG MAINTAINER="Duckie ([email protected])"

dts devel build -f

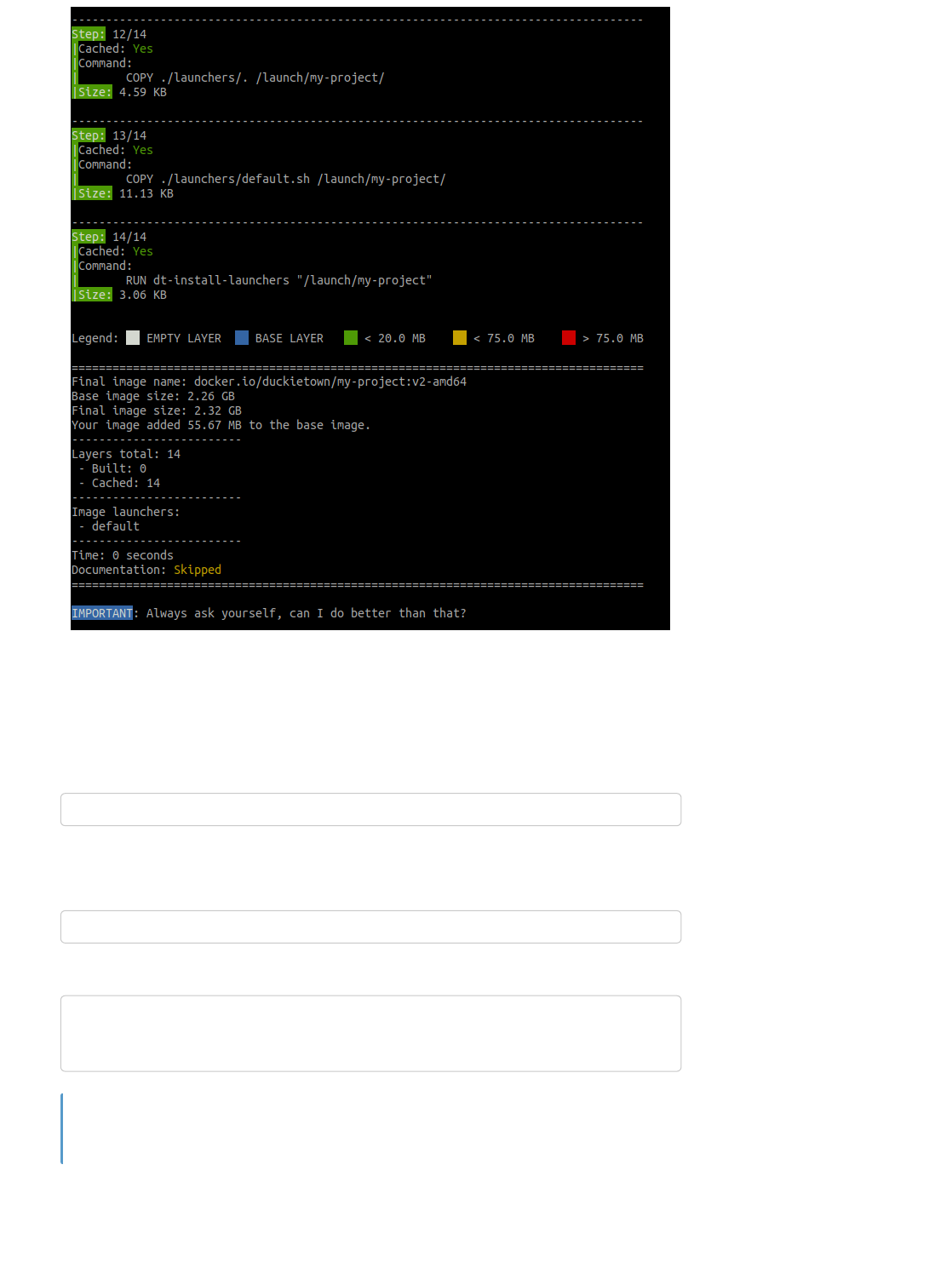

Fig. 5 Building a container through the development command in the Duckietown shell.

As discussed above, building a project produces a Docker image. This image is the compiled version

of your source project. You can find the name of the resulting image at the end of the output of the dts

devel build command. In the example above, look for the line:

Run the project

You can now run your container by executing the following command.

This will show the following message:

You just built and run your first Duckietown-compliant Docker image.

Add your code

Now that we know how to build Docker images for Duckietown, let’s build one with a simple Python

program inside.

Final image name: docker.io/duckietown/my-project:v2-amd64

dts devel run

...

==> Launching app...

This is an empty launch script. Update it to launch your application.

<== App terminated!

Congratulations

Open a terminal and go to the directory my-project created in the previous page. In Duckietown,

Python code must belong to a Python package. Python packages are placed inside the directory

packages/ you can find at the root of my-project. Let us go ahead and create a directory called

my_package inside packages/.

A Python package is simply a directory containing a special file called __init__.py. So, let us turn that

my_package into a Python package.

Now that we have a Python package, we can create a Python script in it. Use your favorite text editor

or IDE to create the file ./packages/my_package/my_script.py and place the following code inside it.

We now need to tell Docker we want this script to be the one executed when we run the command dts

devel run. In order to do so, open the file ./launchers/default.sh and replace the line

with the line

Always prepend dt-exec to the main command in ./launchers/default.sh.

Using dt-exec helps us deal with an interesting problem called “The zombie reaping problem”

(more about this in this article).

You can also create more launcher scripts. To know more about that, check out the page Launchers.

Let us now re-build the image:

and run it:

This will show the following message:

You just built and run your own Duckietown-compliant Docker image.

Define dependencies

mkdir -p ./packages/my_package

touch ./packages/my_package/__init__.py

message = "\nHello World!\n"

print(message)

echo "This is an empty launch script. Update it to launch your application."

dt-exec python3 -m "my_package.my_script"

Note

dts devel build -f

dts devel run

...

==> Launching app...

Hello World!

<== App terminated!

Congratulations

It is quite common that our programs need to import libraries, thus we need a way to install them. Since

our programs reside in Docker images, we need a way to install libraries inside the image when the

image is built.

All the project templates provided by Duckietown support two package managers out of the box:

Advanced Package Tool (apt)

Pip Installs Packages for Python3 (pip3)

List your apt packages or pip3 packages in the files dependencies-apt.txt. As for pip3

dependencies, we make a distinction between Duckietown-owned and third-party libraries. List all the

Duckietown-owned libraries you want to install in the file dependencies-py3.dt.txt and third-party

libraries in the file dependencies-py3.txt.

Dependencies files support comments (lines starting with #) and empty lines. Use them to

group dependencies together and make dependencies lists easier to read and maintain.

Running dts devel build after editing these files will rebuild the image with the new dependencies

installed.

That’s it! Now you know how to customize dependencies as well!

Exercise: Basic NumPy program

Write a program that performs the sum of two numbers using NumPy. Add numpy to the file

dependencies-py3.txt to have it installed in the Docker image.

Run on your Duckiebot

Now that we know how to package a piece of software into a Docker image for Duckietown using

DTProjects, we can go one step further and write code that will run on the robot instead of our local

computer.

This part assumes that you have a Duckiebot up and running with hostname ROBOT_NAME. Of course,

you don’t need to change the hostname to ROBOT_NAME, just replace it with your robot name in the

instructions below. You can make sure that your robot is ready by executing the command

If we can ping the robot, we are good to go.

Let us go back to our script file my_script.py we created in Add your code and change it to:

We can now modify slightly the instructions for building the image so that the image gets built directly

on the robot instead of our computer. Run the command

As you can see, we added -H ROBOT_NAME to the build command. This new option tells dts where to

build the image.

Once the image is built, we can run it on the robot by running the command

Note

ping ROBOT_NAME.local

import os

vehicle_name = os.environ['VEHICLE_NAME']

message = f"\nHello from {vehicle_name}!\n"

print(message)

dts devel build -f -H ROBOT_NAME

dts devel run -H ROBOT_NAME

If everything worked as expected, you should see the following output,

Some WARNING and INFO messages from dts and from the container’s entrypoint are

expected. Here are some examples:

From dts

WARNING:dts:Forced!

INFO:dts:Running an image for arm64v8 on aarch64.

Among entrypoint logs of the container:

INFO: The environment variable VEHICLE_NAME is not set. Using

'myduckiebot'.

You just built and run your first Duckietown-compliant and Duckiebot-compatible Docker

image. We are sure this is just the first of many!

Launchers

As we discussed earlier, the directory launchers/ contains scripts that can serve as entry scripts to the

Docker containers that are spawned out of a DTProject Docker image.

If you are familiar with Docker.

We purposefully refer to these scripts as entry scripts as not to confuse them with

entrypoints as they are defined by Docker. In fact, launcher scripts will not be directly used

as container entrypoints. A generic entrypoint is defined in all Duckietown images and is used

to configure the containers’ network, environment, etc.

The default launcher

Every project template comes with a launcher file called default.sh. This is the launcher that runs by

default inside the container when we run the command dts devel run. This launcher should be

modified and set to launch the main behavior of our project. For example, when making a DTProject

that implements autonomous lane following in Duckietown, we want our default launcher to run the

lane following pipeline.

While the default launcher is great, you will quickly realize that, for some projects, having only one

entry behavior is quite limiting. For example, let us imagine that we are working on an autonomous

lane following behavior DTProject. In this case, apart from running the full behavior (we will use the

default launcher for that), it might be useful to have entry scripts that allow us to run single components

of our multi-component lane follower pipeline, say, so that we can test single components one at a

time.

In some cases, we will use additional launchers as test scripts. In other cases, it might make sense to

leave the default launcher untouched and only implement additional launchers. This might be useful,

for example, when a DTProject implements a collection of sensor calibrations. In this case, we might

...

==> Launching app...

Hello from ROBOT_NAME!

<== App terminated!

Note

Congratulations

Attention

want to leave the default launcher untouched as there is no “default calibration”, and then have the

additional launchers camera-intrinsic-calibration.sh, camera-extrinsic-calibration.sh, etc.

Add new launcher

In order to add a new launcher, you can simply make a new file inside the launchers/ directory. The

only rule is that such file must either have extension .sh, or, have a shebang declaration on its first line.

For example, we can make a launcher out of a Python script file launchers/my-launcher.py with the

content,

The output from the command dts devel build shows the list of launchers installed in the

image we just built. For example,

Launchers inside the container

Launchers inside the container appear as shell commands named as dt-launcher-<LAUNCHER_NAME>.

For example, the launcher file launchers/my-launcher.sh will be available as the shell command

dt-launcher-my-launcher.

Project Templates

While DTProjects are open to all sorts of customizations to accommodate for virtually any need,

Duckietown provides a set of templated projects that cover the most common use cases. For each

project template we provide a templated repository.

Coding project templates

These project templates are designed for projects implementing robot behaviors (e.g., lane following),

back-end systems (e.g., REST APIs), etc.

Table 2 Coding project templates

Name Features Link

basic

Ubuntu 20.04 base image

Support for Python

packages in packages/

duckietown/template-basic

ros

Same as basic

Support for ROS

Support for catkin

packages in packages/

duckietown/template-ros

core

Same as ros

Duckietown autonomous

driving modules baked in

duckietown/template-core

Documentation project templates

These project templates are designed for projects implementing documentation books and manuals

such as the one you are looking at right now.

#!/usr/bin/env python3

message = "This is a Python launcher"

print(message)

Note

...

-------------------------

Image launchers:

- default

- test-agent

- test-lens-distortion

-------------------------

Table 3 Documentation project templates

Name Features Link

book

Based on Jupyter Book

Compiles into HTML and

PDF

Easy cross-reference with

Duckietown books library

duckietown/template-book

Duckietown Learning Experiences (LXs) project templates

These project templates are designed for the development of web-based dashboards based on

\compose\. A project based on this template is the robot dashboard.

Table 4 Duckietown Learning Experiences (LXs) project

templates

Name Link

lx duckietown/template-lx

lx-recipe duckietown/template-lx-recipe

Dashboard project templates

These project templates are designed for the development of web-based dashboards based on

\compose\. A project based on this template is the robot dashboard.

Table 5 Dashboard project templates

Name Link

compose duckietown/template-compose

In Duckietown, everything runs in Docker containers. All you need in order to run a piece of software in

Duckietown is a Duckietown-compliant Docker image with your software in it.

Duckietown-compliant Docker images are built out of Duckietown Projects, in short DTProjects. A

DTProject is a git repository with an agreed-upon structure that our automated tools can parse.

Everybody can use existing DTProjects and everybody can create new ones and distribute them freely

over the internet. Agreeing on a structure for our code is crucial for the creation of a community of

developers who can easily share their solutions.

High level robot behaviors in Duckietown, such as autonomous driving (in Duckiebots) or autonomous

flight (in Duckiedrones), are implemented collectively by a set of DTProjects. Breaking down a complex

problem into smaller problems that are tackled independently is very common in software development

and is inspired by a military strategy called “divide and conquer” (latin: divide et impera) commonly

used by the Roman Empire.

By implementing complex behaviors as the union of smaller and simpler DTProjects, we can drastically

improve the usability, mantainability, and modularity of our software solutions. Moreover,

DTProjects can be exchanged, shared, extended, and improved with and by the community.

Each DTProject produces a Docker Image

As we will see in the next sections, a DTProject needs to be built into its corresponding executable

Docker image before it can be executed.

Understanding the pros and cons of forcing code to only run inside Docker containers right now is

crucial.

Bad news first! The biggest downside of using Docker to isolate the execution of our code is that by

doing so, we are wrapping our source code inside a Docker image. This makes it harder for us to do

development, since our code will not be easily accessible through our local file system. This is what

scares/frustrates people away from Docker the most. Keep it in mind, if it happens to you, you are not

the only one. The Duckietown development workflow explained in this book aims, among other things,

at reducing the effect of this code isolation. We will get back to this topic later in the book.

As for the good news, i.e., why using Docker to isolate our code makes sense and our life easier, we

could write a book about it, but it is better if we discover those benefits as we go.

Structure of a DTProject

DTProjects have an agreed-upon files structure with known locations for source code, configuration

files, dependencies lists, etc.

Meta-files

.dtproject: Signals that this directory contains a Duckietown Project;

.gitignore: List of files and directories ignore by git;

.dockerignore: List of files and directories ignore by docker;

.bumpversion.cfg: Configuration for bumpversion, used to perform semantic versioning on the

project;

Docker

Dockerfile: Dockerfile building the project’s Docker image;

configurations.yaml: Collection of Docker container configurations that can be used on this

project;

Source code

packages/: This directory can contain both Python and Catkin packages;

launchers/: Scripts that can be used as entry scripts inside the project’s Docker container;

Dependencies

dependencies-apt.txt: List of dependency packages that can be installed via apt;

dependencies-py3.dt.txt: List of Duckietown-owned dependency packages that can be

installed via pip;

dependencies-py3.txt: List of third-party dependency packages that can be installed via pip;

Assets and Documentation

assets/: Store static assets in this directory. For example, configuration files you need to bake

into the image;

docs/: Contains a book project that can be used to write documentation about this project;

html/: Hosts the HTML of the compiled documentation in docs/;

Other

LICENSE.pdf: The Duckietown Software Terms of Use;

README.md: Brief description of the DTProject;

Project Templates

While a DTProject exposes a lot of parameters that the final user can tune, e.g., base Docker image,

support for ROS, etc., it helps to have a set of predefined presets covering the most common use

cases. We call these Project Templates. Project templates are stored on GitHub as Template

Repositories. These are of a special kind of repositories and their main characteristic is that one can

use them to initialize new repositories.

The simplest module template is called basic and its template repository is duckietown/template-basic.

Since understanding the differences between different templates is outside the scope of this section,

we can use any template for the remainder of this section, we suggest using the one above. For a list

of predefined project templates, check out the Project Templates

In the next sections of this chapter we will learn how to customize, build, and run our own DTProjects

both locally and on a Duckietown robot.

Beginner - Use ROS

Table of contents

New ROS DTProject

Create project from a template

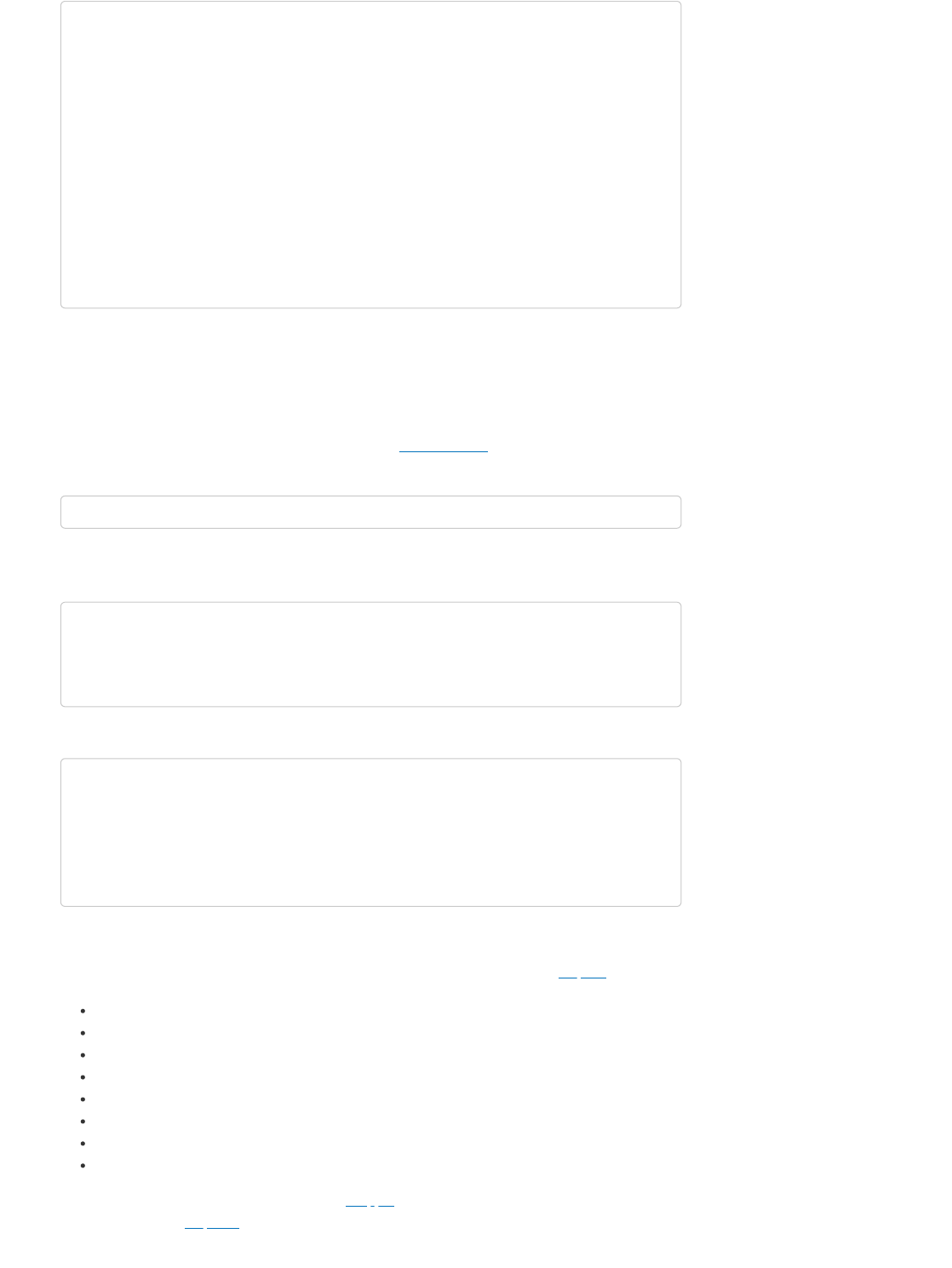

Visit the template repository page duckietown/template-ros. Click on the button that reads “Use this

template” and then choose “Create a new repository” from the dropdown menu.

Fig. 6 Use template repository on GitHub.

This will take you to a page that looks like the following:

What you will need A computer set up with the Duckietown software requirements

An initialized Duckiebot

Completed tutorial on DTProject

What you will get A working knowledge of ROS development in Duckietown

A custom ROS node with a publisher and a subscriber running

on your Duckiebot

What you will need Working environment setup

Accounts setup

What you will get Learn how to create a new ROS-compatible DTProject from a

template

Fig. 7 Creating a repository from template.

Make sure the selected template is duckietown/template-ros, pick a name for our repository (e.g.,

my-ros-project), and add a description for the new repository.

Click on the button Create repository.

You can replace my-ros-project with the name of the repository that you prefer. If you do

change it, make sure you use the right name in the instructions going forward.

This will create a new repository starting from the content of the template template-ros. You can now

open a terminal and clone your newly created repository.

Replace YOUR_NAME in the link above with your GitHub username.

Edit placeholders

As we learned in Edit placeholders, we now need to edit the placeholders left by the template. Head

over to the Dockerfile and edit the following lines using any text editor:

Replace the placeholders strings with, respectively,

Note

git clone https://github.com/YOUR_NAME/my-ros-project

cd my-ros-project

Note

ARG REPO_NAME="<REPO_NAME_HERE>"

ARG DESCRIPTION="<DESCRIPTION_HERE>"

ARG MAINTAINER="<YOUR_FULL_NAME> (<YOUR_EMAIL_ADDRESS>)"

the name of the repository (i.e., my-ros-project);

a brief description of the functionalities implemented in this project;

your name and email address to claim the role of maintainer;

Save the changes. We can now compile this project into a Docker image.

Build the project

Exactly as in Build the project, we can now move to the project root and run the following command to

build our project (beware that it might take some time):

Again, building a project produces a Docker image. This image is the compiled version of your source

project. You can find the name of the resulting image at the end of the output of the dts devel build

command. In this case, look for the line:

Run the project

Again, as in Run the project, we can run our project by executing the command,

This will show the following message:

You just built and run your first ROS-based Duckietown-compliant Docker image.

Catkin Packages

ROS uses the catkin build system to organize and build its software. If you are not familiar with the

catkin build system, you can learn about it by following the official tutorials.

In a nutshell, catkin organizes entire projects in the so-called catkin workspaces. A catkin workspace is

nothing more than a directory containing a bunch of software modules called catkin packages. Each

software module can contain a set of executables (e.g., binaries, script files) called ROS nodes. ROS

nodes interact with one another using two of the most common communication patterns, called

publish-subscribe and request-reply.

ROS implements the publish-subscribe pattern using ROS Publishers and ROS Subscribers, and

the request-reply pattern using ROS Services. More on these later.

Let us now take a step back and review catkin workspaces, packages, and nodes more in details.

Catkin workspace

The directory packages/ you find at the root of a DTProject is a catkin workspace.

dts devel build -f

Final image name: duckietown/my-ros-project:v2-amd64

dts devel run

...

==> Launching app...

This is an empty launch script. Update it to launch your application.

<== App terminated!

Congratulations

What you will need New ROS DTProject as described here

What you will get Learn how to create a new catkin package inside a DTProject

Create a new Catkin package

Open a terminal at the root of the DTProject my-ros-project created earlier. Again, Catkin packages

are directories inside the directory packages/ of my-ros-project. Let us go ahead and create a new

directory called my_package inside packages/.

A Catkin package (also known as a ROS package) is simply a directory containing two special files,

package.xml and CMakeLists.txt. So, let us turn the my_package folder into a ROS package by

creating these two files.

Create the file package.xml inside my_package using your favorite text editor and place/adjust the

following content inside it:

Replace YOUR_FULL_NAME with your first and last name and [email protected] with your email

address.

Now, create the file CMakeLists.txt inside my_package using your favorite text editor and place/adjust

the following content inside it:

We now have a catkin package inside a catkin workspace in a ROS-capable DTProject. We will now

add our code by adding ROS nodes to our catkin package.

ROS Publisher

The most common communication pattern in Robotics is known as publish-subscribe. ROS

implements the publish-subscribe pattern using ROS Publishers and ROS Subscribers. In this

section, we will learn to create a ROS Publisher.

In reality, packages/ is more of a sub-workspace, as it is internally joined with other

packages/ directories from other projects (the ancestor projects) to form a full catkin

workspace.

Advanced: the real story behind the packages directory

mkdir -p ./packages/my_package

<package>

<name>my_package</name>

<version>0.0.1</version>

<description>

My first Catkin package in Duckietown.

</description>

<maintainer email="[email protected]">YOUR_FULL_NAME</maintainer>

<license>None</license>

<buildtool_depend>catkin</buildtool_depend>

</package>

cmake_minimum_required(VERSION 2.8.3)

project(my_package)

find_package(catkin REQUIRED COMPONENTS

rospy

)

catkin_package()

What you will need A Duckietown robot turned ON and visible on dts fleet

discover

What you will get Learn how to create a new ROS Node publishing messages

using a ROS Publisher

The general concept is simple, a publisher has the job of publishing messages from a ROS node into

the ROS network for other nodes to receive (using ROS Subscribers).

Create Publisher ROS Node

We will see how to write a simple ROS program with Python, but any language supported by ROS

should do it. In Create a new Catkin package, we learned how to make a new Catkin package, we will

now populate that package with a ROS node hosting a ROS Publisher.

Nodes are placed inside the directory src/ of a Catkin package. Let us go ahead and create the

directory src inside my_package. We can do so by running the following command from the root of our

DTProject.

We now use our favorite text editor to create the file my_publisher_node.py inside the src/ directory

we just created and place the following code in it:

Using the super class DTROS provided by the Python module duckietown.dtros is not

mandatory but it is highly suggested as it provides a lot of useful features that plain ROS does

not. More on these later.

We now need to the tell our file system that we want our file my_publisher_node.py be treated as an

executable file. We do so by running the following command from the root of our DTProject:

Define launcher

As we discussed above, everything in Duckietown runs inside Docker containers. This means that we

also need to tell Docker what to run when the container is started. In this case, we want our new ROS

publisher node to run.

mkdir -p ./packages/my_package/src

#!/usr/bin/env python3

import os

import rospy

from std_msgs.msg import String

from duckietown.dtros import DTROS, NodeType

class MyPublisherNode(DTROS):

def __init__(self, node_name):

# initialize the DTROS parent class

super(MyPublisherNode, self).__init__(node_name=node_name,

node_type=NodeType.GENERIC)

# static parameters

self._vehicle_name = os.environ['VEHICLE_NAME']

# construct publisher

self._publisher = rospy.Publisher('chatter', String, queue_size=10)

def run(self):

# publish message every 1 second (1 Hz)

rate = rospy.Rate(1)

message = f"Hello from {self._vehicle_name}!"

while not rospy.is_shutdown():

rospy.loginfo("Publishing message: '%s'" % message)

self._publisher.publish(message)

rate.sleep()

if __name__ == '__main__':

# create the node

node = MyPublisherNode(node_name='my_publisher_node')

# run node

node.run()

# keep the process from terminating

rospy.spin()

Note

chmod +x ./packages/my_package/src/my_publisher_node.py

Each DTProject compiles into a single Docker image, but we can declare multiple start “behaviors” for

the same project/image so that the same project can serve multiple (though related) purposes. As we

learned in Launchers, we can use launchers to accomplish this. As we learned in Add new launcher,

we create a new launcher to allow for this new start behavior.

In order to do so, we create the file ./launchers/my-publisher.sh and add the following content,

Launch the Publisher node

This part assumes that you have a Duckiebot up and running with a known hostname, e.g.,

ROBOT_NAME. Let us make sure that our robot is ready by executing the command,

If you can ping the robot, you are good to go.

Let us now re-compile our project using the command

and run it using the newly defined launcher (we use the flag -L/--launcher to achieve this):

This will show the following message:

You just built and run your first Duckietown-compliant and Duckiebot-compatible ROS

publisher.

If you want to stop it, just use Ctrl+C.

ROS Subscriber

#!/bin/bash

source /environment.sh

# initialize launch file

dt-launchfile-init

# launch publisher

rosrun my_package my_publisher_node.py

# wait for app to end

dt-launchfile-join

ping ROBOT_NAME.local

dts devel build -H ROBOT_NAME -f

dts devel run -H ROBOT_NAME -L my-publisher

...

==> Launching app...

[INFO] [1693000564.020676]: [/my_publisher_node] Initializing...

[INFO] [1693000564.028260]: [/my_publisher_node] Node starting with switch=True

[INFO] [1693000564.029052]: [/my_publisher_node] Found 0 user configuration files in

'/data/config/nodes/generic'

[INFO] [1693000564.029608]: [/my_publisher_node] Found 0 user configuration files in

'/data/config/nodes/my_publisher_node'

[INFO] [1693000564.034693]: [/my_publisher_node] Health status changed [STARTING] ->

[STARTED]

[INFO] [1693000564.035819]: Publishing message: 'Hello from vbot!'

[INFO] [1693000565.036489]: Publishing message: 'Hello from vbot!'

[INFO] [1693000566.036378]: Publishing message: 'Hello from vbot!'

...

Congratulations

What you will need A Duckietown robot turned ON and visible on dts fleet

discover

What you will get Learn how to create a new ROS Node receiving messages

The most common communication pattern in Robotics is known as publish-subscribe. ROS

implements the publish-subscribe pattern using ROS Publishers and ROS Subscribers. In this

section, we will learn to create a ROS Subscriber.

The general concept is simple: a subscriber has the job of listening for messages about a specific topic

that are published by other ROS nodes (using ROS Publishers) over a ROS network.

Create Subscriber ROS Node

In Create a new Catkin package, we learned how to make a new Catkin package, we will now populate

that package with a ROS node hosting a ROS Subscriber.

Again, nodes are placed inside the directory src/ of a Catkin package. If we followed the tutorial

Create Publisher ROS Node we should already have this directory.

We now use our favorite text editor to create the file my_subscriber_node.py inside the src/ directory

we just created and place the following code in it:

Using the super class DTROS provided by the Python module duckietown.dtros is not

mandatory but it is highly suggested as it provides a lot of useful features that plain ROS does

not. More on these later.

We now need to the tell our file system that we want our file my_subscriber_node.py be treated as an

executable file. We do so by running the following command from the root of our DTProject:

Define launcher

We now create a new launcher file ./launchers/my-subscriber.sh with the following content inside,

using a ROS Subscriber

#!/usr/bin/env python3

import rospy

from duckietown.dtros import DTROS, NodeType

from std_msgs.msg import String

class MySubscriberNode(DTROS):

def __init__(self, node_name):

# initialize the DTROS parent class

super(MySubscriberNode, self).__init__(node_name=node_name,

node_type=NodeType.GENERIC)

# construct subscriber

self.sub = rospy.Subscriber('chatter', String, self.callback)

def callback(self, data):

rospy.loginfo("I heard '%s'", data.data)

if __name__ == '__main__':

# create the node

node = MySubscriberNode(node_name='my_subscriber_node')

# keep spinning

rospy.spin()

Note

chmod +x ./packages/my_package/src/my_subscriber_node.py

Launch the Subscriber node

This part assumes that you have a Duckiebot up and running with a known hostname, e.g.,

ROBOT_NAME. Let us make sure that our robot is ready by executing the command,

If you can ping the robot, you are good to go.

Let us now re-compile our project using the command

and run it using the newly defined launcher (we use the flag -L/--launcher to achieve this):

This will show the following messages before hanging,

This is because the ROS Subscriber is now waiting for messages to come in. Let us open a new

terminal at the root of the project and launch an instance of the publisher we built previously. We can

do so by running the following command,

We need to add the option -n publisher to tell dts to allow multiple instances of the same

project to run simultaneously.

You should notice that messages will start to appear on the subscriber side. The expected output is the

following,

#!/bin/bash

source /environment.sh

# initialize launch file

dt-launchfile-init

# launch subscriber

rosrun my_package my_subscriber_node.py

# wait for app to end

dt-launchfile-join

ping ROBOT_NAME.local

dts devel build -H ROBOT_NAME -f

dts devel run -H ROBOT_NAME -L my-subscriber

...

==> Launching app...

[INFO] [1693000997.289437]: [/my_subscriber_node] Initializing...

[INFO] [1693000997.296816]: [/my_subscriber_node] Node starting with switch=True

[INFO] [1693000997.297660]: [/my_subscriber_node] Found 0 user configuration files

in '/data/config/nodes/generic'

[INFO] [1693000997.298273]: [/my_subscriber_node] Found 0 user configuration files

in '/data/config/nodes/my_subscriber_node'

[INFO] [1693000997.303460]: [/my_subscriber_node] Health status changed [STARTING] -

> [STARTED]

...

dts devel run -H ROBOT_NAME -L my-publisher -n publisher

Note

You just built and run your first Duckietown-compliant and Duckiebot-compatible ROS

subscriber.

If you want to stop it, just use Ctrl+C.

Faster Development Workflow

In this section we will learn some tricks that enable a much faster development workflow in dts.

Run locally

As we have seen so far, we can add new code to a DTProject or make a change to existing code and

then build and run either locally or directly on a Duckiebot. While working with ROS, we only have seen

examples in which all the nodes were run on the Duckiebot. This is because ROS sets up all the nodes

to defaultly look for a ROS network on the local machine, and given that our ROS network originates

on the Duckiebot, we can leverage the default configuration of ROS by running the nodes directly on

the Duckiebot. Unfortunately, building and running on the Duckiebot is not the best option when it

comes to speed, though having a responsive development workflow is crucial in software development.

There are two major issues with the current workflow,

1. our source code always resides on our local computer, so Docker needs to transfer it over to the

Duckiebot for the image to be built;

2. the Duckiebot’s on-board computer is too slow to be used as a development testbed (while it is

fine for final deployments);

Ideally, we would like to be able to build and run ROS nodes on our local computer in a way that is

transparent to all other ROS nodes. This can be done very easily with dts, and we will now see how.

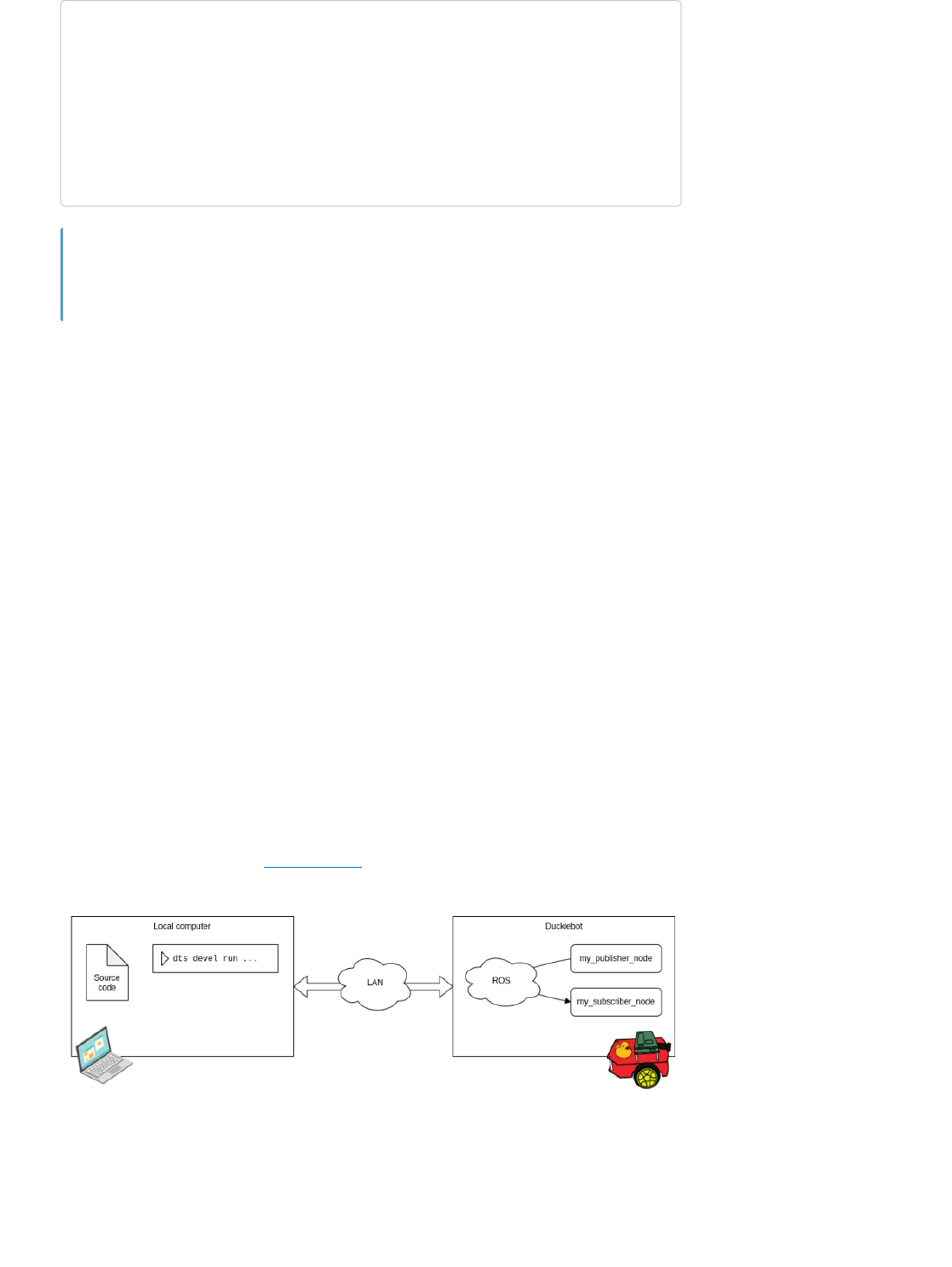

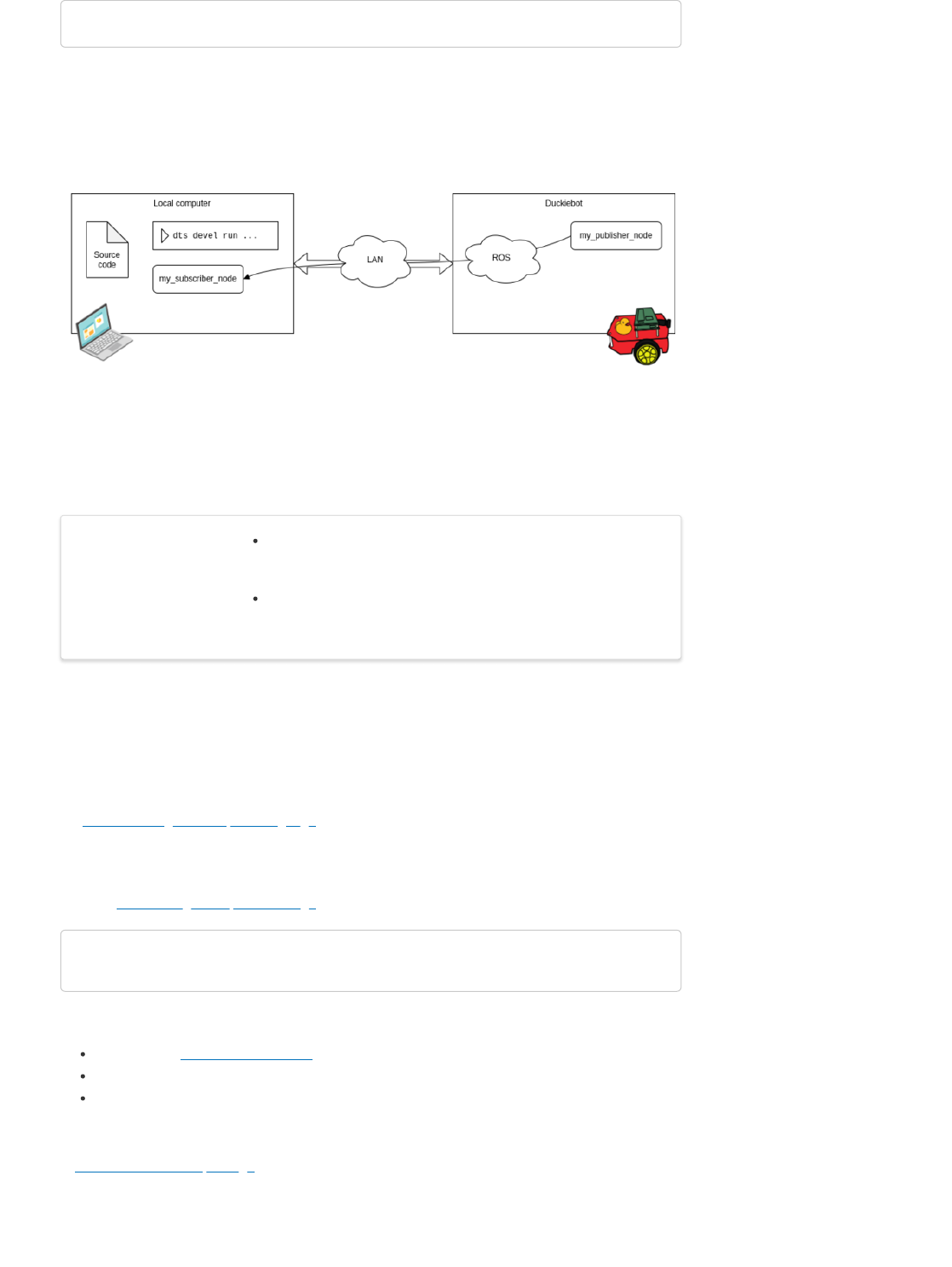

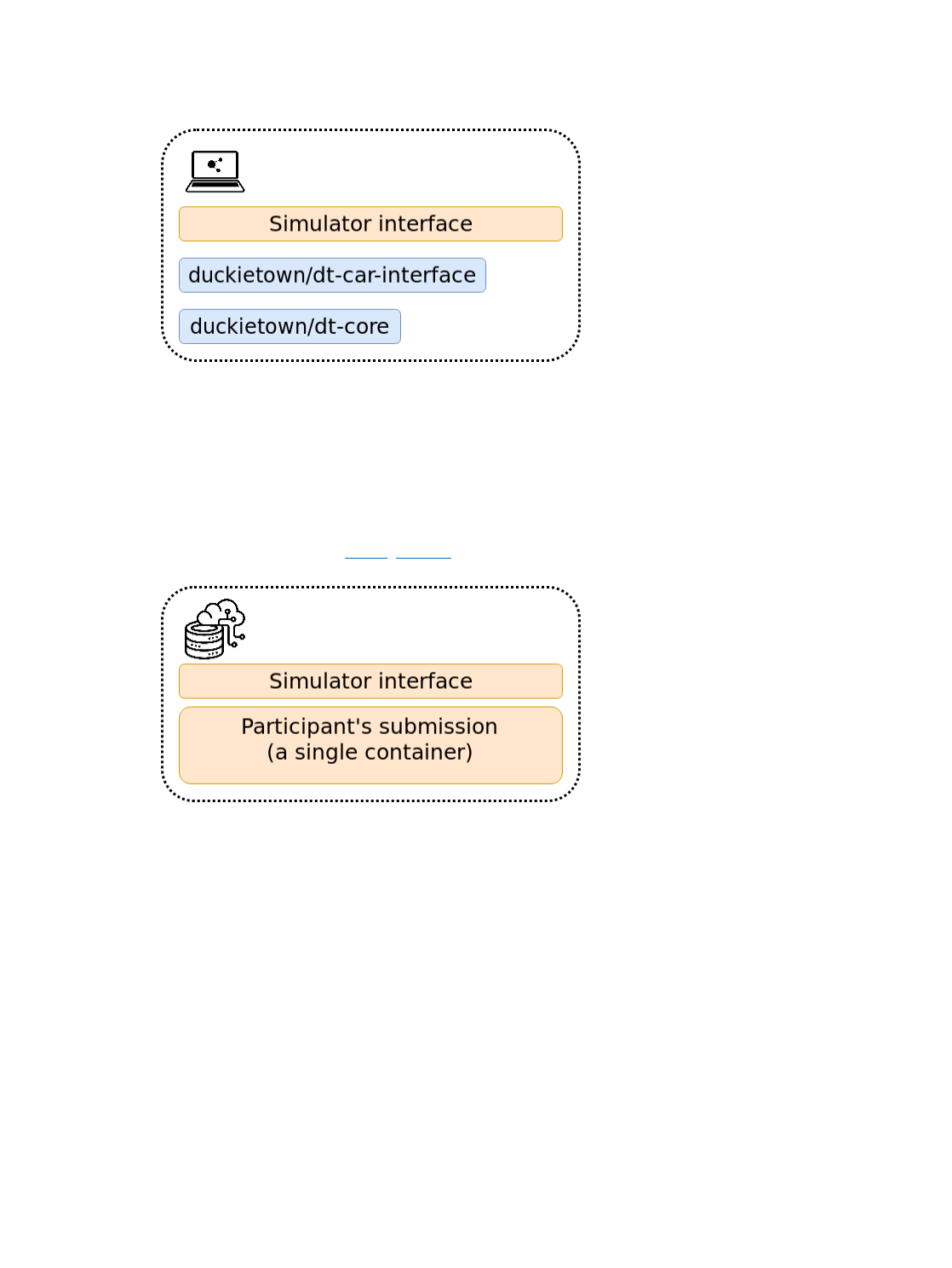

Let us go back to the example in ROS Subscriber. A block diagram showing the ROS nodes and their

location in the network would be the following,

Fig. 8 Block diagram for a Pub-Sub setup with both nodes running on the Duckiebot.

Let us now keep everything as is for the Publisher and slightly change the commands we use to build

and run the Subscriber. In particular, we use the following commands instead,

...

==> Launching app...

[INFO] [1693000997.289437]: [/my_subscriber_node] Initializing...

[INFO] [1693000997.296816]: [/my_subscriber_node] Node starting with switch=True

[INFO] [1693000997.297660]: [/my_subscriber_node] Found 0 user configuration files

in '/data/config/nodes/generic'

[INFO] [1693000997.298273]: [/my_subscriber_node] Found 0 user configuration files

in '/data/config/nodes/my_subscriber_node'

[INFO] [1693000997.303460]: [/my_subscriber_node] Health status changed [STARTING] -

> [STARTED]

[INFO] [1693001092.577549]: I heard 'Hello from ROBOT_NAME!'

[INFO] [1693001093.557725]: I heard 'Hello from ROBOT_NAME!'

...

Congratulations

We are now telling dts to build the project locally (we removed -H ROBOT_NAME from the build

command). We are also telling dts to run the subscriber node locally (we removed -H ROBOT_NAME

from the run command) but to connect it to the ROS network of the Duckiebot (using the --ros/-R

ROBOT_NAME option on the run command). A block diagram showing the new configuration of ROS

nodes and their location in the network would be the following,

Fig. 9 Block diagram for a Pub-Sub setup with the Subscriber node running on the local

computer.

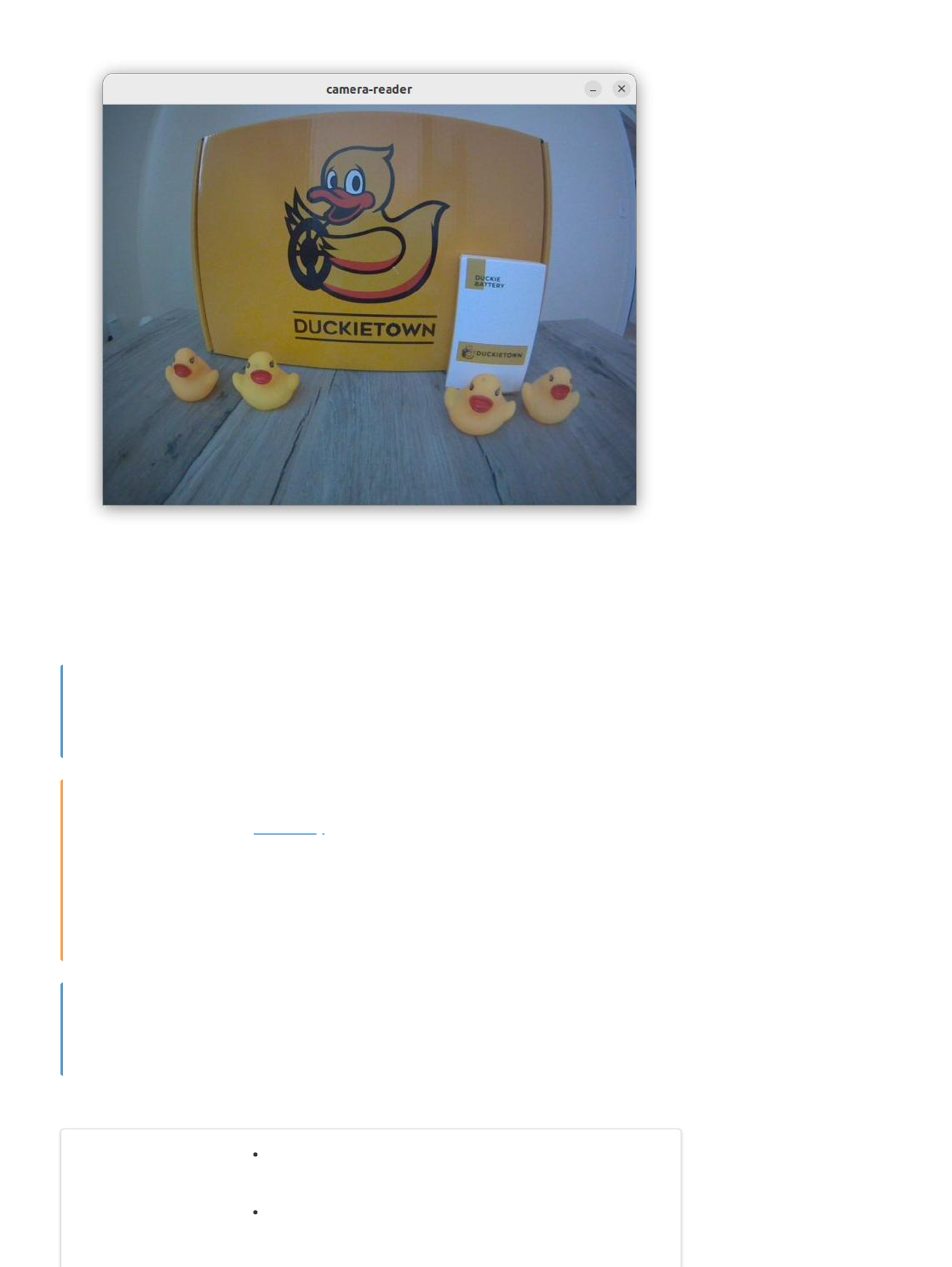

Subscribe to camera

Topic and message type of interest

As you should know by now, ROS allows different processes to communicate with one another by

exchanging messages over topics. In order for two ROS nodes to be able to talk, they need to agree

on a topic name (e.g., camera images), and a message type (e.g., each message is a JPEG image).

In ROS, a topic is identified by a string (e.g., camera/image), while message types are defined using

the official messages description language.

In the case of the camera sensor, the topic used by the Duckiebot to publish camera frames is

/ROBOT_NAME/camera_node/image/compressed, while the message type used over this topic is the

standard sensor_msgs/CompressedImage, and contains the following fields.

where,

header: is the standard ROS header object;

format: specifies the format of the data, for example, png or jpeg;

data: is an array of bytes containing the actual image in the format specified;

Create Subscriber ROS Node

In Create a new Catkin package, we learned how to make a new Catkin package. We will assume that

a catkin package already exists, i.e., packages/my_package/. You can reuse the one we created

earlier.

dts devel build -f

dts devel run -R ROBOT_NAME -L my-subscriber

What you will need A Duckietown robot turned ON and visible on dts fleet

discover

What you will get Learn how to receive camera images from your robot using a

ROS Subscriber

std_msgs/Header header

string format

uint8[] data

We now use our favorite text editor to create the file camera_reader_node.py inside the src/ directory

of our catkin package and add the following content,

Again, we make our node executable,

Define launcher

Similarly to what we did in the section Define launcher, we create a new launcher file

./launchers/camera-reader.sh with the content,

Let us now re-compile our project using the command

Launch the node

We are now ready to run our camera reader node,

#!/usr/bin/env python3

import os

import rospy

from duckietown.dtros import DTROS, NodeType

from sensor_msgs.msg import CompressedImage

import cv2

from cv_bridge import CvBridge

class CameraReaderNode(DTROS):

def __init__(self, node_name):

# initialize the DTROS parent class

super(CameraReaderNode, self).__init__(node_name=node_name,

node_type=NodeType.VISUALIZATION)